Data Exploration#

Table df_orders#

Let’s look at the information about the dataframe.

df_orders.explore.info()

| Summary | Column Types | |||

|---|---|---|---|---|

| Rows | 99.44k | Text | 2 | |

| Features | 8 | Categorical | 1 | |

| Missing cells | 4.91k (<1%) | Int | 0 | |

| Exact Duplicates | --- | Float | 0 | |

| Fuzzy Duplicates | --- | Datetime | 5 | |

| Memory Usage (Mb) | 19 | |||

Initial Column Analysis#

We will examine each column individually.

order_id

df_orders.order_id.explore.info(plot=False)

| Summary | Text Metrics | |||

|---|---|---|---|---|

| Total Values | 99.44k (100%) | Avg Word Count | 1.0 | |

| Missing Values | --- | Max Length (chars) | 32.0 | |

| Empty Strings | --- | Avg Length (chars) | 32.0 | |

| Distinct Values | 99.44k (100%) | Median Length (chars) | 32.0 | |

| Non-Duplicates | 99.44k (100%) | Min Length (chars) | 32.0 | |

| Exact Duplicates | --- | Most Common Length | 32 (100.0%) | |

| Fuzzy Duplicates | --- | Avg Digit Ratio | 0.63 | |

| Values with Duplicates | --- | |||

| Memory Usage | 8 | |||

customer_id

df_orders.customer_id.explore.info(plot=False)

| Summary | Text Metrics | |||

|---|---|---|---|---|

| Total Values | 99.44k (100%) | Avg Word Count | 1.0 | |

| Missing Values | --- | Max Length (chars) | 32.0 | |

| Empty Strings | --- | Avg Length (chars) | 32.0 | |

| Distinct Values | 99.44k (100%) | Median Length (chars) | 32.0 | |

| Non-Duplicates | 99.44k (100%) | Min Length (chars) | 32.0 | |

| Exact Duplicates | --- | Most Common Length | 32 (100.0%) | |

| Fuzzy Duplicates | --- | Avg Digit Ratio | 0.62 | |

| Values with Duplicates | --- | |||

| Memory Usage | 8 | |||

order_status

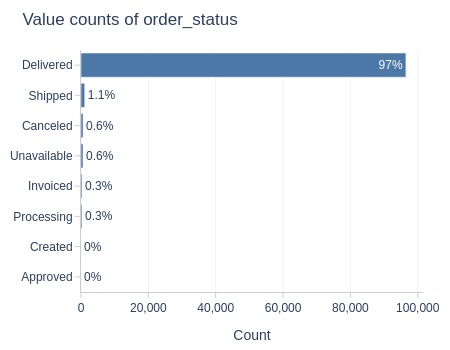

df_orders.order_status.explore.info()

| Summary | Text Metrics | Value Counts | |||||

|---|---|---|---|---|---|---|---|

| Total Values | 99.44k (100%) | Avg Word Count | 1.0 | Delivered | 96.48k (97%) | ||

| Missing Values | --- | Max Length (chars) | 11.0 | Shipped | 1.11k (1%) | ||

| Empty Strings | --- | Avg Length (chars) | 9.0 | Canceled | 625 (<1%) | ||

| Distinct Values | 8 (<1%) | Median Length (chars) | 9.0 | Unavailable | 609 (<1%) | ||

| Non-Duplicates | --- | Min Length (chars) | 7.0 | Invoiced | 314 (<1%) | ||

| Exact Duplicates | 99.43k (99%) | Most Common Length | 9 (97.0%) | Processing | 301 (<1%) | ||

| Fuzzy Duplicates | 99.43k (99%) | Avg Digit Ratio | 0.00 | Created | 5 (<1%) | ||

| Values with Duplicates | 8 (<1%) | Approved | 2 (<1%) | ||||

| Memory Usage | <1 Mb | ||||||

Key Observations:

97% of all orders were delivered

order_purchase_dt

df_orders.order_purchase_dt.explore.info()

| Summary | Data Quality Stats | Temporal Stats | |||||

|---|---|---|---|---|---|---|---|

| First date | 2016-09-04 | Values | 99.44k (100%) | Missing Years | --- | ||

| Last date | 2018-10-17 | Zeros | --- | Missing Months | 1 (4%) | ||

| Avg Days Frequency | 0.01 | Missings | --- | Missing Weeks | 11 (10%) | ||

| Min Days Interval | 0 | Distinct | 98.88k (99%) | Missing Days | 140 (18%) | ||

| Max Days Interval | 62 | Duplicates | 566 (<1%) | Weekend Percentage | 23.0% | ||

| Memory Usage | 1 | Dup. Values | 556 (<1%) | Most Common Weekday | Monday | ||

Key Observations:

In order_purchase_dt missing 4% of months, 10% of weeks, 18% of days

order_approved_dt

df_orders.order_approved_dt.explore.info()

| Summary | Data Quality Stats | Temporal Stats | |||||

|---|---|---|---|---|---|---|---|

| First date | 2016-09-15 | Values | 99.28k (99%) | Missing Years | --- | ||

| Last date | 2018-09-03 | Zeros | --- | Missing Months | 1 (4%) | ||

| Avg Days Frequency | 0.01 | Missings | 160 (<1%) | Missing Weeks | 11 (11%) | ||

| Min Days Interval | 0 | Distinct | 90.73k (91%) | Missing Days | 108 (15%) | ||

| Max Days Interval | 66 | Duplicates | 8.71k (9%) | Weekend Percentage | 21.4% | ||

| Memory Usage | 1 | Dup. Values | 7.04k (7%) | Most Common Weekday | Tuesday | ||

Key Observations:

In order_approved_dt 160 missing values (<1% of total rows)

In order_approved_dt missing 4% of months, 11% of weeks, 15% of days

order_delivered_carrier_dt

df_orders.order_delivered_carrier_dt.explore.info()

| Summary | Data Quality Stats | Temporal Stats | |||||

|---|---|---|---|---|---|---|---|

| First date | 2016-10-08 | Values | 97.66k (98%) | Missing Years | --- | ||

| Last date | 2018-09-11 | Zeros | --- | Missing Months | --- | ||

| Avg Days Frequency | 0.01 | Missings | 1.78k (2%) | Missing Weeks | 2 (2%) | ||

| Min Days Interval | 0 | Distinct | 81.02k (81%) | Missing Days | 157 (22%) | ||

| Max Days Interval | 20 | Duplicates | 18.42k (19%) | Weekend Percentage | 1.9% | ||

| Memory Usage | 1 | Dup. Values | 10.09k (10%) | Most Common Weekday | Tuesday | ||

Key Observations:

In order_delivered_carrier_dt 1.78k missing values (2% of total rows).

In order_delivered_carrier_dt missing 2% of weeks, 22% of days.

order_delivered_customer_dt

df_orders.order_delivered_customer_dt.explore.info()

| Summary | Data Quality Stats | Temporal Stats | |||||

|---|---|---|---|---|---|---|---|

| First date | 2016-10-11 | Values | 96.48k (97%) | Missing Years | --- | ||

| Last date | 2018-10-17 | Zeros | --- | Missing Months | --- | ||

| Avg Days Frequency | 0.01 | Missings | 2.96k (3%) | Missing Weeks | 3 (3%) | ||

| Min Days Interval | 0 | Distinct | 95.66k (96%) | Missing Days | 92 (12%) | ||

| Max Days Interval | 13 | Duplicates | 3.78k (4%) | Weekend Percentage | 6.9% | ||

| Memory Usage | 1 | Dup. Values | 804 (<1%) | Most Common Weekday | Monday | ||

Key Observations:

In order_delivered_customer_dt 2.96k missing values (3% of total rows).

In order_delivered_customer_dt missing 3% of weeks, 12% of days.

order_estimated_delivery_dt

df_orders.order_estimated_delivery_dt.explore.info(plot=False)

| Summary | Data Quality Stats | Temporal Stats | |||||

|---|---|---|---|---|---|---|---|

| First date | 2016-09-30 | Values | 99.44k (100%) | Missing Years | --- | ||

| Last date | 2018-11-12 | Zeros | --- | Missing Months | --- | ||

| Avg Days Frequency | 0.01 | Missings | --- | Missing Weeks | 5 (4%) | ||

| Min Days Interval | 0 | Distinct | 459 (<1%) | Missing Days | 315 (41%) | ||

| Max Days Interval | 16 | Duplicates | 98.98k (99%) | Weekend Percentage | 0.0% | ||

| Memory Usage | 1 | Dup. Values | 438 (<1%) | Most Common Weekday | Wednesday | ||

Key Observations:

In order_estimated_delivery_dt missing 4% of weeks, 41% of days.

Adding Temporary Dimensions#

To study anomalies across different dimensions, we will add temporary metrics.

We will prefix their names with ‘tmp_’ to indicate that these are temporary metrics to be removed later.

They are temporary because the data may change after preprocessing.

Therefore, the primary metrics will be created after preprocessing.

Let’s check the initial DataFrame size and save it to ensure no data is lost later.

print(df_orders.shape[0])

tmp_ids = df_orders.order_id

99441

tmp_df_reviews = (

df_reviews.groupby('order_id', as_index=False)

.agg(tmp_avg_reviews_score = ('review_score', 'mean'))

)

tmp_df_reviews['tmp_avg_reviews_score'] = np.floor(tmp_df_reviews['tmp_avg_reviews_score']).astype(int).astype('category')

tmp_df_payments = (

df_payments.groupby('order_id', as_index=False)

.agg(tmp_payment_types = ('payment_type', lambda x: ', '.join(x.unique())))

)

tmp_df_items = (

df_items.merge(df_products, on='product_id', how='left')

.assign(product_category_name = lambda x: x['product_category_name'].cat.add_categories(['missed in df_products']))

.fillna({'product_category_name': 'missed in df_products'})

.groupby('order_id', as_index=False)

.agg(tmp_product_categories = ('product_category_name', lambda x: ', '.join(x.unique())))

)

df_orders = (

df_orders.merge(tmp_df_reviews, on='order_id', how='left')

.merge(tmp_df_payments, on='order_id', how='left')

.merge(tmp_df_items, on='order_id', how='left')

.merge(df_customers[['customer_id', 'customer_state']], on='customer_id', how='left')

.rename(columns={'customer_state': 'tmp_customer_state'})

)

df_orders['tmp_product_categories'] = df_orders['tmp_product_categories'].fillna('Missing in Items').astype('category')

df_orders['tmp_payment_types'] = df_orders['tmp_payment_types'].fillna('Missing in Pays').astype('category')

df_orders['tmp_order_purchase_month'] = df_orders['order_purchase_dt'].dt.month_name().fillna('Missing purchase dt').astype('category')

df_orders['tmp_order_purchase_weekday'] = df_orders['order_purchase_dt'].dt.day_name().fillna('Missing purchase dt').astype('category')

conditions = [

df_orders['order_purchase_dt'].isna()

, df_orders['order_purchase_dt'].dt.hour.between(4,11)

, df_orders['order_purchase_dt'].dt.hour.between(12,16)

, df_orders['order_purchase_dt'].dt.hour.between(17,22)

, df_orders['order_purchase_dt'].dt.hour.isin([23, 0, 1, 2, 3])

]

choices = ['Missing purchase dt', 'Morning', 'Afternoon', 'Evening', 'Night']

df_orders['tmp_purchase_time_of_day'] = np.select(conditions, choices, default='Missing purchase dt')

df_orders['tmp_purchase_time_of_day'] = df_orders['tmp_purchase_time_of_day'].astype('category')

conditions = [

df_orders['order_delivered_customer_dt'].isna() | df_orders['order_estimated_delivery_dt'].isna()

, df_orders['order_delivered_customer_dt'] > df_orders['order_estimated_delivery_dt']

, df_orders['order_delivered_customer_dt'] <= df_orders['order_estimated_delivery_dt']

]

choices = ['Missing delivery dt', 'Delayed', 'Not Delayed']

df_orders['tmp_is_delayed'] = np.select(conditions, choices, default='Missing delivery dt')

df_orders['tmp_is_delayed'] = df_orders['tmp_is_delayed'].astype('category')

conditions = [

df_orders['order_status'].isna(),

df_orders['order_status'] == 'Delivered',

df_orders['order_status'] != 'Delivered',

]

choices = ['Missing Status', 'Delivered', 'Not Delivered']

df_orders['tmp_is_delivered'] = np.select(conditions, choices, default='Missing Status')

df_orders['tmp_is_delivered'] = df_orders['tmp_is_delivered'].astype('category')

del tmp_df_reviews, tmp_df_payments, tmp_df_items

Verified that nothing was lost.

df_orders.shape[0]

99441

set(df_orders.order_id) == set(tmp_ids)

True

All good.

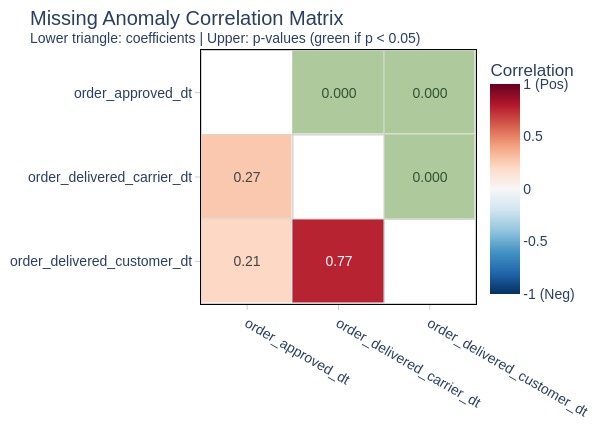

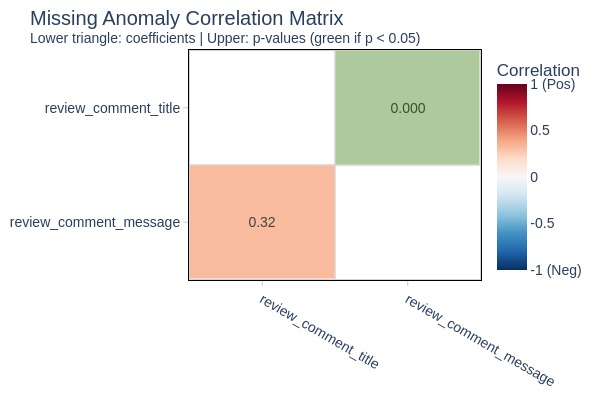

Exploring Missing Values#

Let’s examine which columns contain missing values.

df_orders.explore.anomalies_report(

anomaly_type='missing'

, width=600

)

| Count | Percent | |

|---|---|---|

| order_approved_dt | 160 | 0.2% |

| order_delivered_carrier_dt | 1783 | 1.8% |

| order_delivered_customer_dt | 2965 | 3.0% |

| order_approved_dt | order_delivered_carrier_dt | order_delivered_customer_dt | |

|---|---|---|---|

| order_approved_dt | |||

| order_delivered_carrier_dt | < 8.2% / ^ 91.2% | ||

| order_delivered_customer_dt | < 4.9% / ^ 91.2% | < 60.1% / ^ 99.9% |

| Column | Category | Total | Anomaly | Anomaly Rate | Total % | Anomaly % | % Diff |

|---|---|---|---|---|---|---|---|

| tmp_is_delayed | Missing delivery dt | 2965 | 2965 | 100.0% | 3.0% | 99.5% | 96.5% |

| tmp_is_delivered | Not Delivered | 2963 | 2957 | 99.8% | 3.0% | 99.2% | 96.2% |

| tmp_avg_reviews_score | 1 | 11756 | 2086 | 17.7% | 11.8% | 70.0% | 58.2% |

| order_status | Shipped | 1107 | 1107 | 100.0% | 1.1% | 37.1% | 36.0% |

| tmp_product_categories | Missing in Items | 775 | 775 | 100.0% | 0.8% | 26.0% | 25.2% |

| order_status | Canceled | 625 | 619 | 99.0% | 0.6% | 20.8% | 20.1% |

| order_status | Unavailable | 609 | 609 | 100.0% | 0.6% | 20.4% | 19.8% |

| order_status | Invoiced | 314 | 314 | 100.0% | 0.3% | 10.5% | 10.2% |

| order_status | Processing | 301 | 301 | 100.0% | 0.3% | 10.1% | 9.8% |

| order_id | customer_id | order_status | order_purchase_dt | order_approved_dt | order_delivered_carrier_dt | order_delivered_customer_dt | order_estimated_delivery_dt | tmp_avg_reviews_score | tmp_payment_types | tmp_product_categories | tmp_customer_state | tmp_order_purchase_month | tmp_order_purchase_weekday | tmp_purchase_time_of_day | tmp_is_delayed | tmp_is_delivered | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 95049 | aef214f769de82d355c5d2ab49e15891 | d83a72326816260884edd05238aaa508 | Invoiced | 2016-10-06 13:07:13 | 2016-10-06 16:03:13 | NaT | NaT | 2016-12-02 00:00:00 | 5 | Credit Card | telefonia | SP | October | Thursday | Afternoon | Missing delivery dt | Not Delivered |

| 14994 | 263f5778d1130e9c186958780172a107 | 7324ecb0ff143f561193d22bea7d63fb | Canceled | 2017-10-12 08:39:30 | 2017-10-12 09:06:26 | NaT | NaT | 2017-11-06 00:00:00 | 1 | Credit Card, Voucher | dvds_blu_ray | SP | October | Thursday | Morning | Missing delivery dt | Not Delivered |

| 3786 | b07abc8b9acaf00e79b4657419f469f3 | 15308b044c9608fc82e57f2e5d6878f6 | Unavailable | 2017-03-09 17:45:04 | 2017-03-09 17:45:04 | NaT | NaT | 2017-03-28 00:00:00 | 1 | Credit Card | Missing in Items | SP | March | Thursday | Evening | Missing delivery dt | Not Delivered |

| 87216 | 560f8dd770a2b2c009a4fc935c507014 | 8ba0374666bfb38c409875762a5af81e | Shipped | 2017-12-16 18:51:09 | 2017-12-16 19:10:22 | 2017-12-19 20:28:56 | NaT | 2018-01-12 00:00:00 | 1 | Credit Card | telefonia | RJ | December | Saturday | Evening | Missing delivery dt | Not Delivered |

| 72190 | 3757dc827b45811e32f858867877df67 | 442c2a88842315cb04a3d46658371689 | Processing | 2017-08-21 10:59:48 | 2017-08-23 02:46:19 | NaT | NaT | 2017-09-14 00:00:00 | 2 | Boleto | informatica_acessorios | SP | August | Monday | Morning | Missing delivery dt | Not Delivered |

Key Observations:

Missing values in these columns likely belong to orders that did not reach a certain status.

We will analyze missing values in each column separately.

Missing in order_approved_dt

tmp_miss = df_orders[df_orders['order_approved_dt'].isna()]

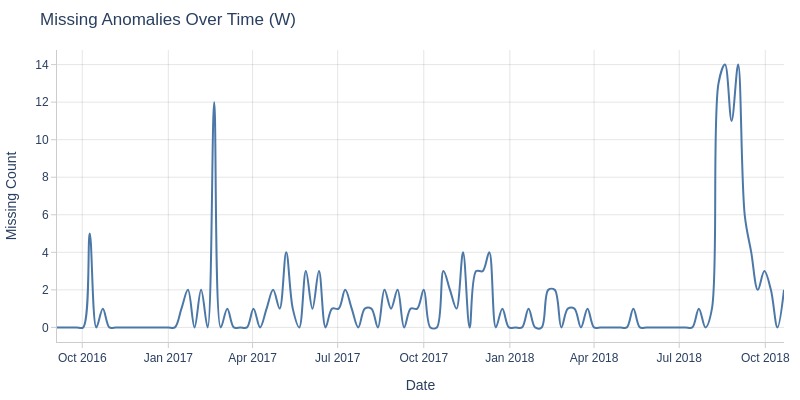

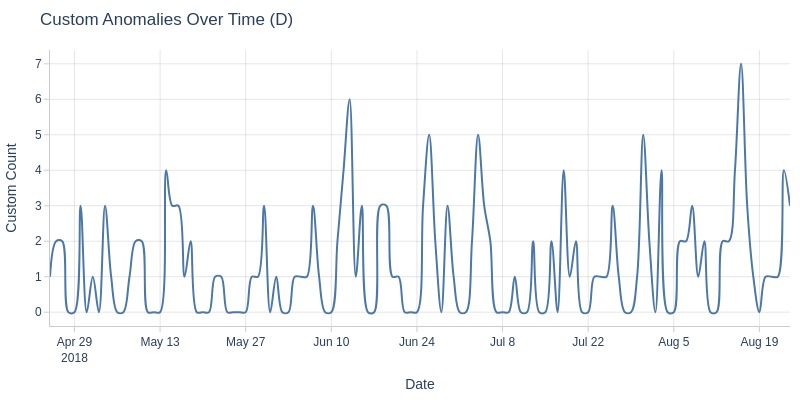

Let’s examine missing values in payment approval time over time. Time will be based on order creation time.

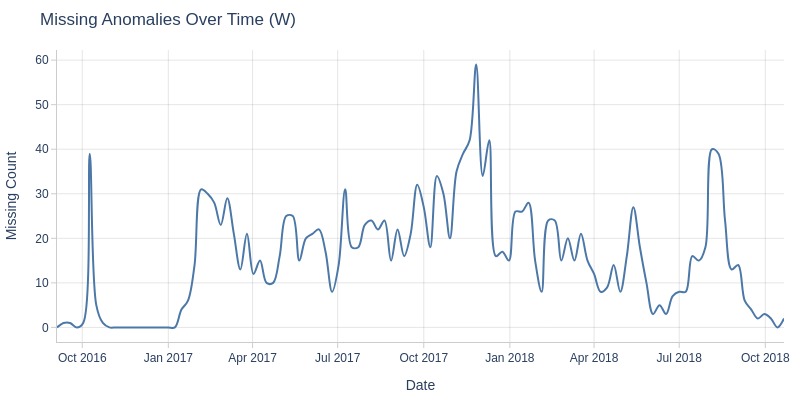

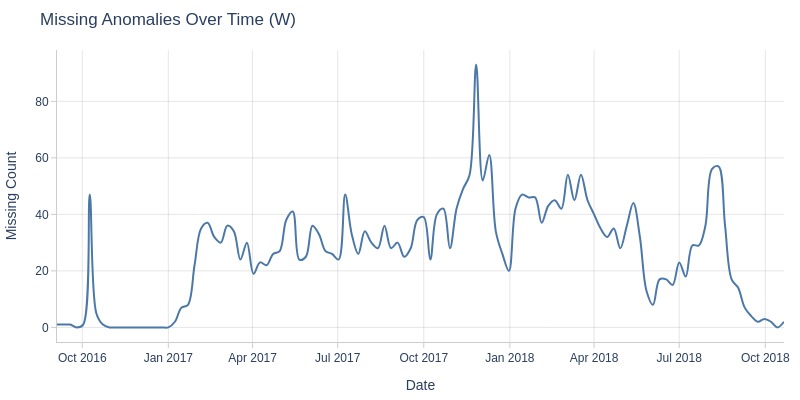

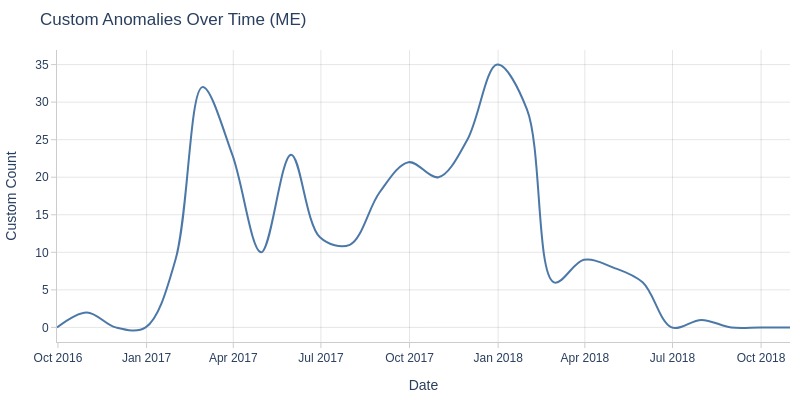

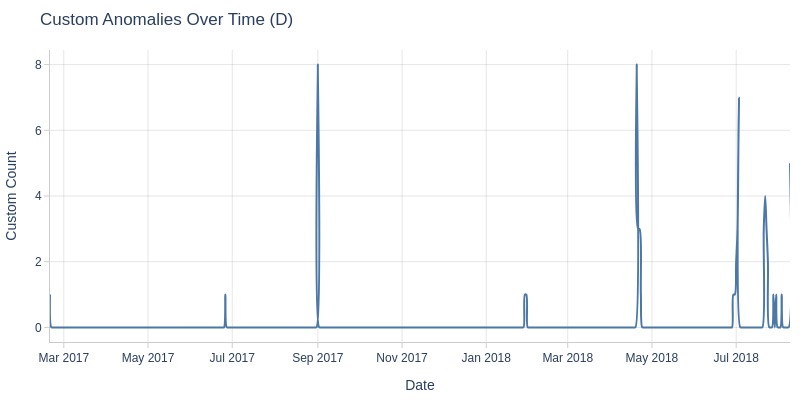

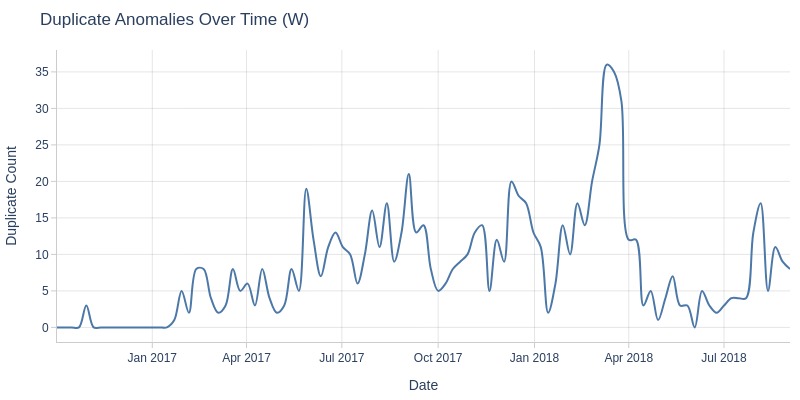

df_orders['order_approved_dt'].explore.anomalies_over_time(

time_column='order_purchase_dt'

, anomaly_type='missing'

, freq='W'

)

Key Observations:

In February 2017 and August 2018, there was a spike in orders missing payment approval timestamps.

Let’s analyze by order status.

df_orders['order_approved_dt'].explore.anomalies_by_categories(

anomaly_type='missing'

, pct_diff_threshold=-100

, include_columns='order_status'

)

| Column | Category | Total | Anomaly | Anomaly Rate | Total % | Anomaly % | % Diff |

|---|---|---|---|---|---|---|---|

| order_status | Canceled | 625 | 141 | 22.6% | 0.6% | 88.1% | 87.5% |

| order_status | Created | 5 | 5 | 100.0% | 0.0% | 3.1% | 3.1% |

| order_status | Delivered | 96478 | 14 | 0.0% | 97.0% | 8.8% | -88.3% |

Key Observations:

Missing values in the “canceled” and “created” statuses are logical.

However, 14 missing values in order_approved_dt for orders with “delivered” status are unusual.

Let’s examine these 14 delivered orders with missing order_approved_dt.

tmp_miss[lambda x: x.order_status == 'Delivered']

| order_id | customer_id | order_status | order_purchase_dt | order_approved_dt | order_delivered_carrier_dt | order_delivered_customer_dt | order_estimated_delivery_dt | tmp_avg_reviews_score | tmp_payment_types | tmp_product_categories | tmp_customer_state | tmp_order_purchase_month | tmp_order_purchase_weekday | tmp_purchase_time_of_day | tmp_is_delayed | tmp_is_delivered | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 5323 | e04abd8149ef81b95221e88f6ed9ab6a | 2127dc6603ac33544953ef05ec155771 | Delivered | 2017-02-18 14:40:00 | NaT | 2017-02-23 12:04:47 | 2017-03-01 13:25:33 | 2017-03-17 | 4 | Boleto | eletroportateis | SP | February | Saturday | Afternoon | Not Delayed | Delivered |

| 16567 | 8a9adc69528e1001fc68dd0aaebbb54a | 4c1ccc74e00993733742a3c786dc3c1f | Delivered | 2017-02-18 12:45:31 | NaT | 2017-02-23 09:01:52 | 2017-03-02 10:05:06 | 2017-03-21 | 5 | Boleto | construcao_ferramentas_seguranca | RS | February | Saturday | Afternoon | Not Delayed | Delivered |

| 19031 | 7013bcfc1c97fe719a7b5e05e61c12db | 2941af76d38100e0f8740a374f1a5dc3 | Delivered | 2017-02-18 13:29:47 | NaT | 2017-02-22 16:25:25 | 2017-03-01 08:07:38 | 2017-03-17 | 5 | Boleto | missed in df_products | SP | February | Saturday | Afternoon | Not Delayed | Delivered |

| 22663 | 5cf925b116421afa85ee25e99b4c34fb | 29c35fc91fc13fb5073c8f30505d860d | Delivered | 2017-02-18 16:48:35 | NaT | 2017-02-22 11:23:10 | 2017-03-09 07:28:47 | 2017-03-31 | 5 | Boleto | cool_stuff | CE | February | Saturday | Afternoon | Not Delayed | Delivered |

| 23156 | 12a95a3c06dbaec84bcfb0e2da5d228a | 1e101e0daffaddce8159d25a8e53f2b2 | Delivered | 2017-02-17 13:05:55 | NaT | 2017-02-22 11:23:11 | 2017-03-02 11:09:19 | 2017-03-20 | 5 | Boleto | cool_stuff | RJ | February | Friday | Afternoon | Not Delayed | Delivered |

| 26800 | c1d4211b3dae76144deccd6c74144a88 | 684cb238dc5b5d6366244e0e0776b450 | Delivered | 2017-01-19 12:48:08 | NaT | 2017-01-25 14:56:50 | 2017-01-30 18:16:01 | 2017-03-01 | 4 | Boleto | esporte_lazer | SP | January | Thursday | Afternoon | Not Delayed | Delivered |

| 38290 | d69e5d356402adc8cf17e08b5033acfb | 68d081753ad4fe22fc4d410a9eb1ca01 | Delivered | 2017-02-19 01:28:47 | NaT | 2017-02-23 03:11:48 | 2017-03-02 03:41:58 | 2017-03-27 | 5 | Boleto | moveis_decoracao | SP | February | Sunday | Night | Not Delayed | Delivered |

| 39334 | d77031d6a3c8a52f019764e68f211c69 | 0bf35cac6cc7327065da879e2d90fae8 | Delivered | 2017-02-18 11:04:19 | NaT | 2017-02-23 07:23:36 | 2017-03-02 16:15:23 | 2017-03-22 | 5 | Boleto | esporte_lazer | SP | February | Saturday | Morning | Not Delayed | Delivered |

| 48401 | 7002a78c79c519ac54022d4f8a65e6e8 | d5de688c321096d15508faae67a27051 | Delivered | 2017-01-19 22:26:59 | NaT | 2017-01-27 11:08:05 | 2017-02-06 14:22:19 | 2017-03-16 | 2 | Boleto | moveis_decoracao | MG | January | Thursday | Evening | Not Delayed | Delivered |

| 61743 | 2eecb0d85f281280f79fa00f9cec1a95 | a3d3c38e58b9d2dfb9207cab690b6310 | Delivered | 2017-02-17 17:21:55 | NaT | 2017-02-22 11:42:51 | 2017-03-03 12:16:03 | 2017-03-20 | 5 | Boleto | ferramentas_jardim | MG | February | Friday | Evening | Not Delayed | Delivered |

| 63052 | 51eb2eebd5d76a24625b31c33dd41449 | 07a2a7e0f63fd8cb757ed77d4245623c | Delivered | 2017-02-18 15:52:27 | NaT | 2017-02-23 03:09:14 | 2017-03-07 13:57:47 | 2017-03-29 | 5 | Boleto | moveis_decoracao | MG | February | Saturday | Afternoon | Not Delayed | Delivered |

| 67697 | 88083e8f64d95b932164187484d90212 | f67cd1a215aae2a1074638bbd35a223a | Delivered | 2017-02-18 22:49:19 | NaT | 2017-02-22 11:31:06 | 2017-03-02 12:06:06 | 2017-03-21 | 4 | Boleto | telefonia | RJ | February | Saturday | Evening | Not Delayed | Delivered |

| 72407 | 3c0b8706b065f9919d0505d3b3343881 | d85919cb3c0529589c6fa617f5f43281 | Delivered | 2017-02-17 15:53:27 | NaT | 2017-02-22 11:31:30 | 2017-03-03 11:47:47 | 2017-03-23 | 3 | Boleto | cama_mesa_banho | RS | February | Friday | Afternoon | Not Delayed | Delivered |

| 84999 | 2babbb4b15e6d2dfe95e2de765c97bce | 74bebaf46603f9340e3b50c6b086f992 | Delivered | 2017-02-18 17:15:03 | NaT | 2017-02-22 11:23:11 | 2017-03-03 18:43:43 | 2017-03-31 | 4 | Boleto | cool_stuff | MA | February | Saturday | Evening | Not Delayed | Delivered |

Key Observations:

All delivered orders with missing order_approved_dt used “boleto” as the payment method. This may be a characteristic of “boleto” usage.

All these orders were placed in January and February 2017. There may have been a system issue where approval timestamps were not saved.

Let’s examine the 5 created orders that have missing values in the payment approval time.

Let’s look at 5 “created” orders with missing payment approval timestamps.

tmp_miss[lambda x: x.order_status == 'Created']

| order_id | customer_id | order_status | order_purchase_dt | order_approved_dt | order_delivered_carrier_dt | order_delivered_customer_dt | order_estimated_delivery_dt | tmp_avg_reviews_score | tmp_payment_types | tmp_product_categories | tmp_customer_state | tmp_order_purchase_month | tmp_order_purchase_weekday | tmp_purchase_time_of_day | tmp_is_delayed | tmp_is_delivered | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 7434 | b5359909123fa03c50bdb0cfed07f098 | 438449d4af8980d107bf04571413a8e7 | Created | 2017-12-05 01:07:52 | NaT | NaT | NaT | 2018-01-11 | 1 | Credit Card | Missing in Items | SP | December | Tuesday | Night | Missing delivery dt | Not Delivered |

| 9238 | dba5062fbda3af4fb6c33b1e040ca38f | 964a6df3d9bdf60fe3e7b8bb69ed893a | Created | 2018-02-09 17:21:04 | NaT | NaT | NaT | 2018-03-07 | 1 | Boleto | Missing in Items | DF | February | Friday | Evening | Missing delivery dt | Not Delivered |

| 21441 | 7a4df5d8cff4090e541401a20a22bb80 | 725e9c75605414b21fd8c8d5a1c2f1d6 | Created | 2017-11-25 11:10:33 | NaT | NaT | NaT | 2017-12-12 | 1 | Boleto | Missing in Items | RJ | November | Saturday | Morning | Missing delivery dt | Not Delivered |

| 55086 | 35de4050331c6c644cddc86f4f2d0d64 | 4ee64f4bfc542546f422da0aeb462853 | Created | 2017-12-05 01:07:58 | NaT | NaT | NaT | 2018-01-08 | 1 | Credit Card | Missing in Items | RS | December | Tuesday | Night | Missing delivery dt | Not Delivered |

| 58958 | 90ab3e7d52544ec7bc3363c82689965f | 7d61b9f4f216052ba664f22e9c504ef1 | Created | 2017-11-06 13:12:34 | NaT | NaT | NaT | 2017-12-01 | 5 | Credit Card | Missing in Items | PR | November | Monday | Afternoon | Missing delivery dt | Not Delivered |

Key Observations:

Orders with “created” status and missing payment approval timestamps were placed long ago and never delivered. The data may not have been processed.

Let’s analyze by average order review score.

df_orders['order_approved_dt'].explore.anomalies_by_categories(

anomaly_type='missing'

, pct_diff_threshold=-100

, include_columns='tmp_avg_reviews_score'

)

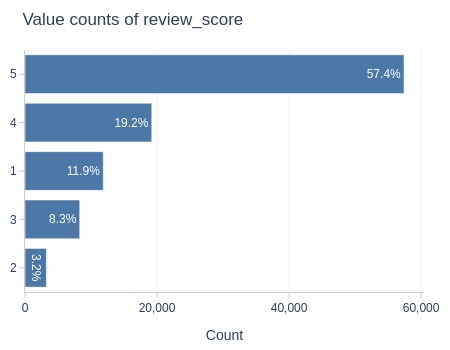

| Column | Category | Total | Anomaly | Anomaly Rate | Total % | Anomaly % | % Diff |

|---|---|---|---|---|---|---|---|

| tmp_avg_reviews_score | 1 | 11756 | 67 | 0.6% | 11.8% | 41.9% | 30.1% |

| tmp_avg_reviews_score | 2 | 3244 | 16 | 0.5% | 3.3% | 10.0% | 6.7% |

| tmp_avg_reviews_score | 3 | 8268 | 18 | 0.2% | 8.3% | 11.2% | 2.9% |

| tmp_avg_reviews_score | 4 | 19129 | 16 | 0.1% | 19.2% | 10.0% | -9.2% |

| tmp_avg_reviews_score | 5 | 57044 | 43 | 0.1% | 57.4% | 26.9% | -30.5% |

Key Observations:

The difference in proportions is significantly higher for score 1. These orders were likely not delivered.

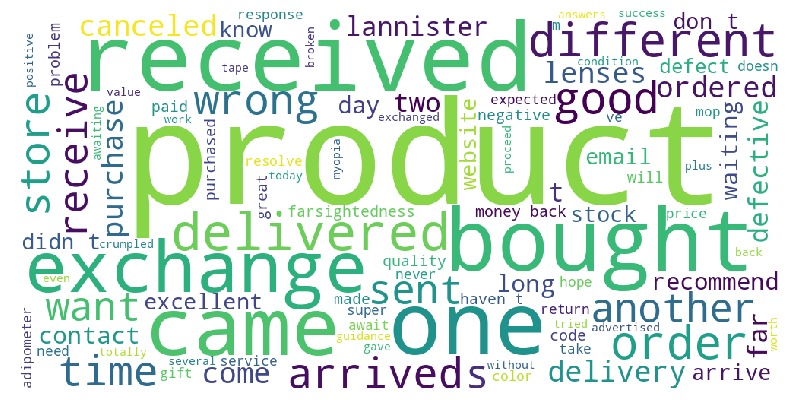

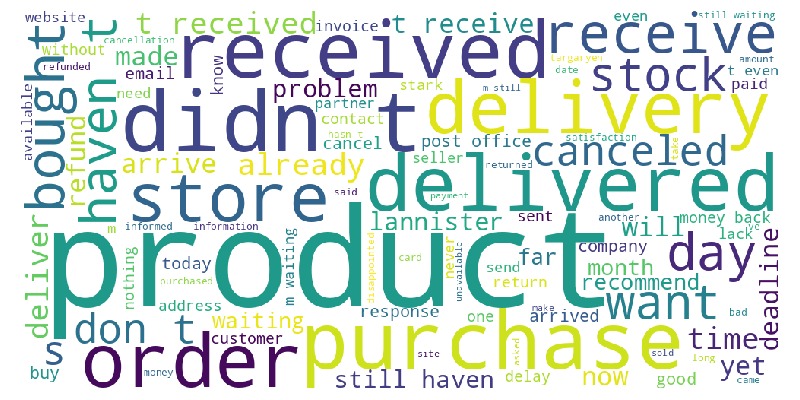

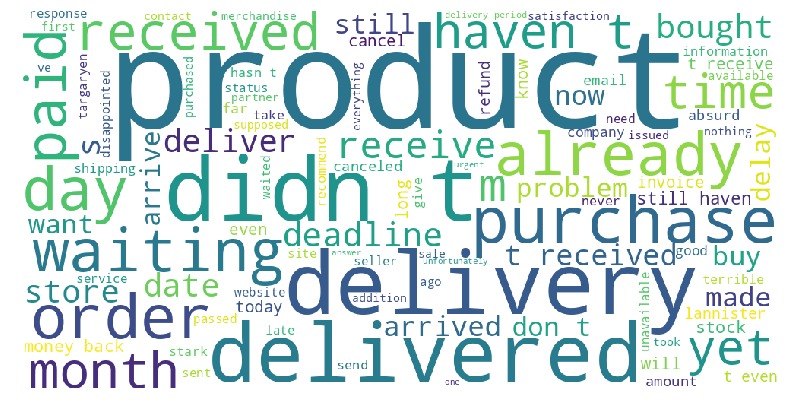

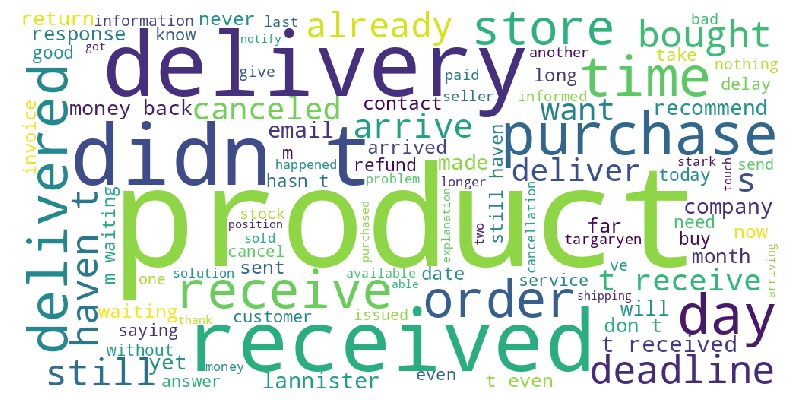

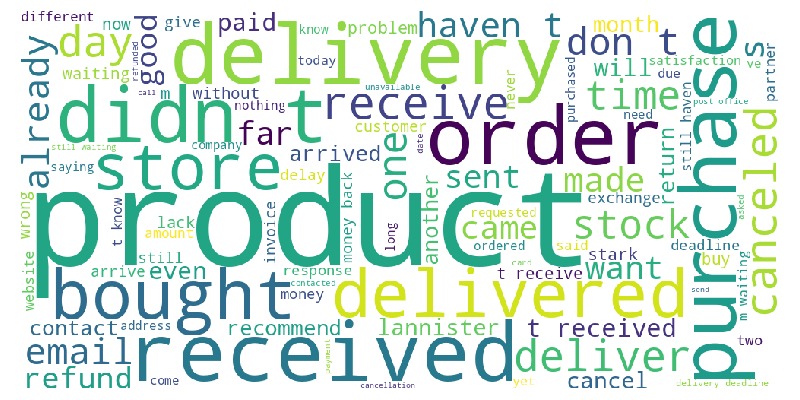

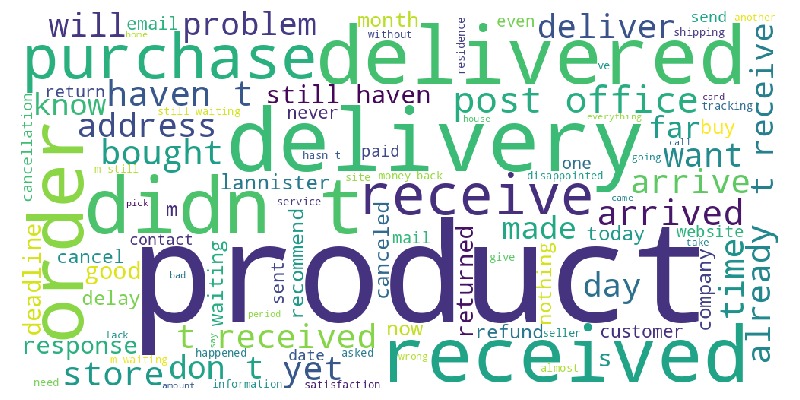

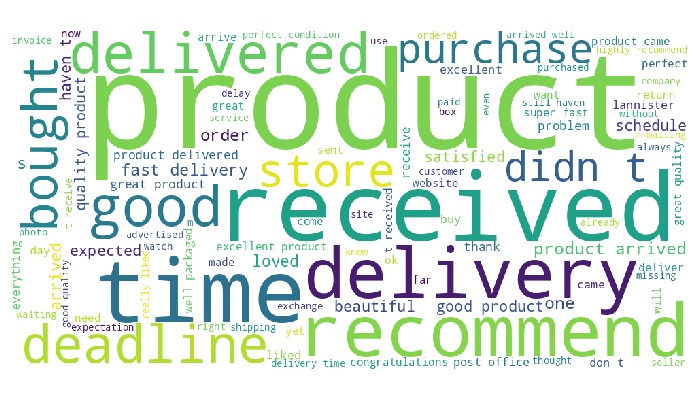

Let’s examine a word cloud from review messages.

tmp_miss = tmp_miss.merge(df_reviews, on='order_id', how='left')

tmp_miss.viz.wordcloud('review_comment_message')

Key Observations:

Many words relate to delivery.

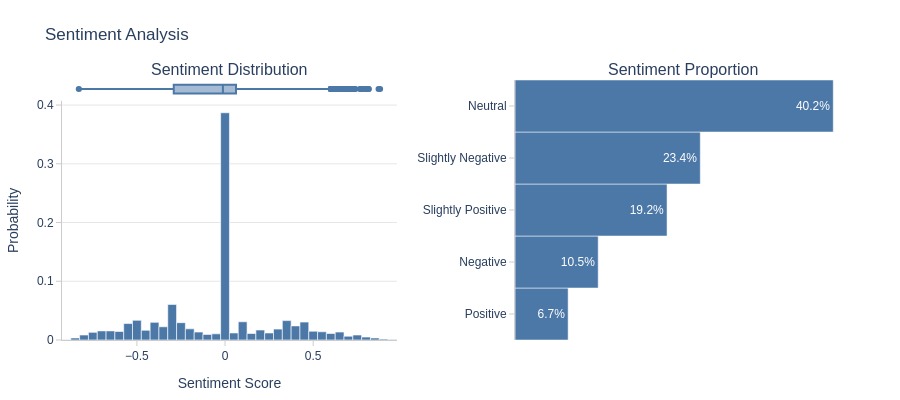

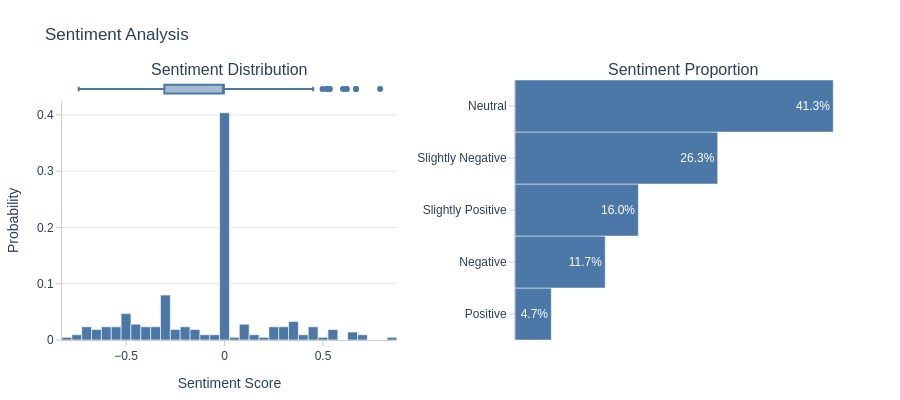

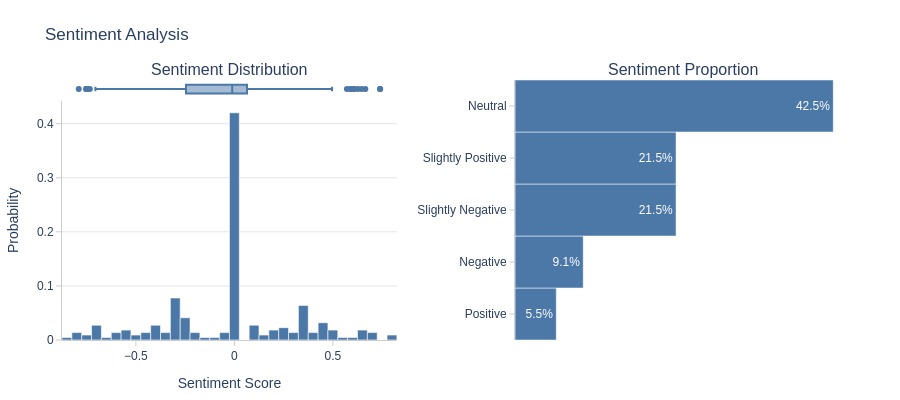

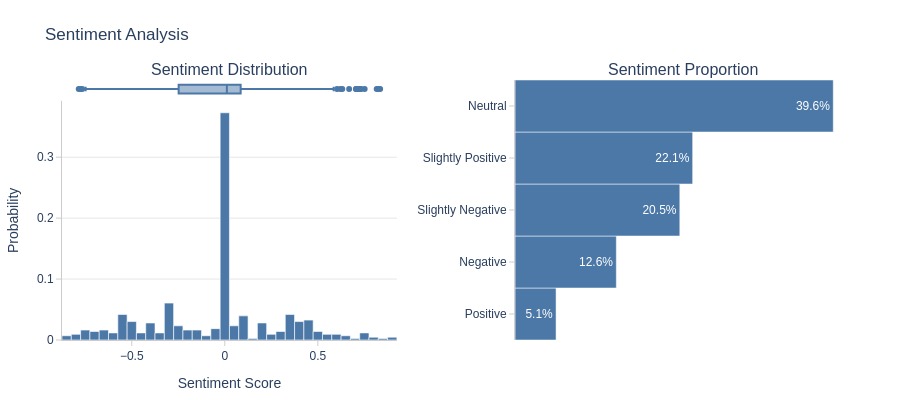

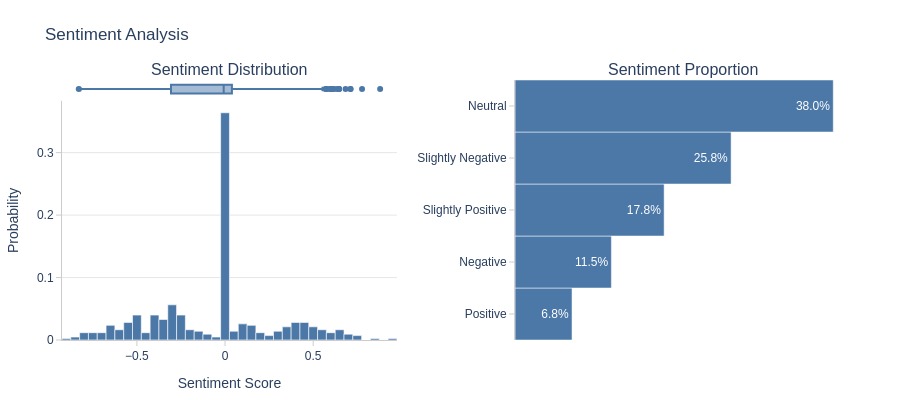

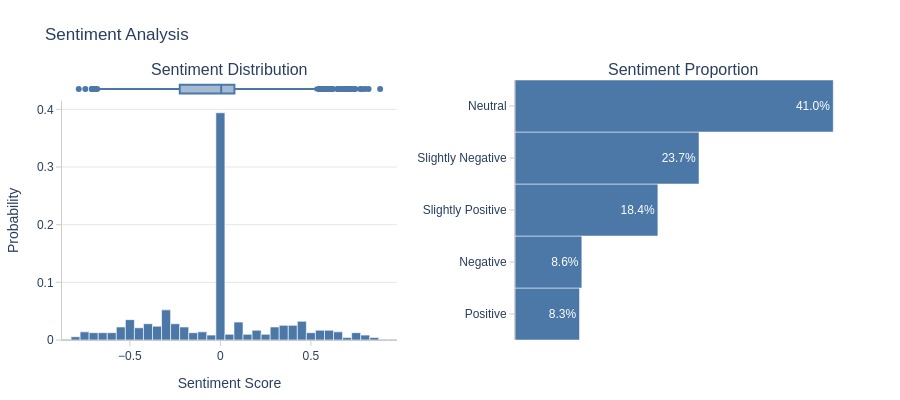

Let’s analyze the sentiment of the text.

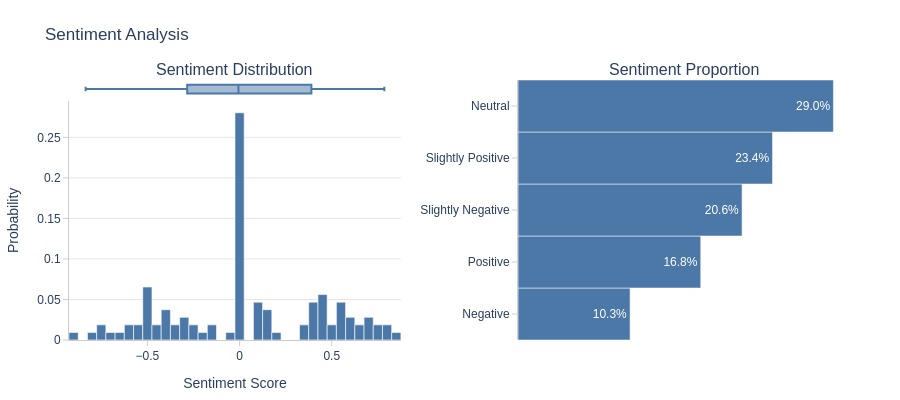

tmp_miss.analysis.sentiment('review_comment_message')

Key Observations:

The sentiment is not predominantly negative.

Let’s randomly sample 20 review comments. We’ll repeat this several times.

messages = (

tmp_miss['review_comment_message']

.dropna()

.sample(20)

.tolist()

)

display(messages)

['contact lenses are positive for farsightedness or negative for myopia i have farsightedness i need plus lenses negative lenses were delivered no one answers me to resolve',

'product did not come as purchased',

'the product i ordered came in a number different from the one i ordered',

'the product arrived at me diverging from what was reported on the website about how it works missing speed reducer button',

'contact lenses are positive for farsightedness or negative for myopia i have farsightedness i need plus lenses negative lenses were delivered no one answers me to resolve',

'although the product was out of stock the company made contact to notify and canceled the order',

'more than 30 days after the purchase was made i received an email stating that the product i purchased was no longer available simply a disregard for the consumer and a regrettable service',

'excellent',

'i believe the order has been cancelled',

'they exchanged my product they sent me someone else s product',

'delivery of the product different from the one ordered i await guidance for the exchange',

'congratulations',

'i did not receive the product it was canceled on request before billing',

'i always buy from lannister stores and i ve never had any problems i think it s safe',

'product was supposed to arrive on the 04/01th and until today nothing a huge amount of nonsense i want urgent provisions to be taken',

'i m waiting could you send a confirmation if it will be delivered',

'easy to assemble',

'product different than advertised',

'i am super disappointed the product came before the deadline but it has come wrong and so far i could not exchange or cancel the purchase',

'and remold is not reliable']

Key Observations:

Based on review messages, many orders were not delivered, but a significant number were delivered.

Therefore, missing payment approval timestamps cannot be assumed to indicate order cancellation.

Let’s analyze by payment type.

df_orders['order_approved_dt'].explore.anomalies_by_categories(

anomaly_type='missing'

, pct_diff_threshold=-100

, include_columns='tmp_payment_types'

)

| Column | Category | Total | Anomaly | Anomaly Rate | Total % | Anomaly % | % Diff |

|---|---|---|---|---|---|---|---|

| tmp_payment_types | Voucher | 1621 | 71 | 4.4% | 1.6% | 44.4% | 42.7% |

| tmp_payment_types | Not Defined | 3 | 3 | 100.0% | 0.0% | 1.9% | 1.9% |

| tmp_payment_types | Voucher, Credit Card | 1118 | 1 | 0.1% | 1.1% | 0.6% | -0.5% |

| tmp_payment_types | Credit Card, Voucher | 1127 | 1 | 0.1% | 1.1% | 0.6% | -0.5% |

| tmp_payment_types | Boleto | 19784 | 30 | 0.2% | 19.9% | 18.8% | -1.1% |

| tmp_payment_types | Credit Card | 74259 | 54 | 0.1% | 74.7% | 33.8% | -40.9% |

Key Observations:

The proportion of “voucher” payments in missing values has increased significantly. This payment type is notably more frequent in missing values.

The “voucher” payment type has a stronger correlation with missing payment approval timestamps. This is likely a characteristic of this payment method.

Let’s analyze by month.

df_orders['order_approved_dt'].explore.anomalies_by_categories(

anomaly_type='missing'

, pct_diff_threshold=-100

, include_columns='tmp_order_purchase_month'

)

| Column | Category | Total | Anomaly | Anomaly Rate | Total % | Anomaly % | % Diff |

|---|---|---|---|---|---|---|---|

| tmp_order_purchase_month | August | 10843 | 59 | 0.5% | 10.9% | 36.9% | 26.0% |

| tmp_order_purchase_month | September | 4305 | 19 | 0.4% | 4.3% | 11.9% | 7.5% |

| tmp_order_purchase_month | October | 4959 | 15 | 0.3% | 5.0% | 9.4% | 4.4% |

| tmp_order_purchase_month | February | 8508 | 18 | 0.2% | 8.6% | 11.2% | 2.7% |

| tmp_order_purchase_month | December | 5674 | 7 | 0.1% | 5.7% | 4.4% | -1.3% |

| tmp_order_purchase_month | November | 7544 | 9 | 0.1% | 7.6% | 5.6% | -2.0% |

| tmp_order_purchase_month | May | 10573 | 10 | 0.1% | 10.6% | 6.2% | -4.4% |

| tmp_order_purchase_month | January | 8069 | 4 | 0.0% | 8.1% | 2.5% | -5.6% |

| tmp_order_purchase_month | July | 10318 | 6 | 0.1% | 10.4% | 3.8% | -6.6% |

| tmp_order_purchase_month | March | 9893 | 5 | 0.1% | 9.9% | 3.1% | -6.8% |

| tmp_order_purchase_month | April | 9343 | 4 | 0.0% | 9.4% | 2.5% | -6.9% |

| tmp_order_purchase_month | June | 9412 | 4 | 0.0% | 9.5% | 2.5% | -7.0% |

Key Observations:

August has a noticeably higher proportion of missing values than other months. This is also visible in the graph above.

Missing Values in order_delivered_carrier_dt

tmp_miss = df_orders[df_orders['order_delivered_carrier_dt'].isna()]

Let’s examine the distribution of missing values in the carrier handover time.

df_orders['order_delivered_carrier_dt'].explore.anomalies_over_time(

time_column='order_purchase_dt'

, anomaly_type='missing'

, freq='W'

)

Key Observations:

In November 2017, there was a spike in orders missing carrier handover timestamps. This may be related to Black Friday.

Let’s analyze by order status.

df_orders['order_delivered_carrier_dt'].explore.anomalies_by_categories(

anomaly_type='missing'

, pct_diff_threshold=-100

, include_columns='order_status'

)

| Column | Category | Total | Anomaly | Anomaly Rate | Total % | Anomaly % | % Diff |

|---|---|---|---|---|---|---|---|

| order_status | Unavailable | 609 | 609 | 100.0% | 0.6% | 34.2% | 33.5% |

| order_status | Canceled | 625 | 550 | 88.0% | 0.6% | 30.8% | 30.2% |

| order_status | Invoiced | 314 | 314 | 100.0% | 0.3% | 17.6% | 17.3% |

| order_status | Processing | 301 | 301 | 100.0% | 0.3% | 16.9% | 16.6% |

| order_status | Created | 5 | 5 | 100.0% | 0.0% | 0.3% | 0.3% |

| order_status | Approved | 2 | 2 | 100.0% | 0.0% | 0.1% | 0.1% |

| order_status | Delivered | 96478 | 2 | 0.0% | 97.0% | 0.1% | -96.9% |

Key Observations:

There are 2 delivered orders with missing order_delivered_carrier_dt.

All orders with “unavailable” status have missing order_delivered_carrier_dt.

Let’s examine these 2 delivered orders.

tmp_miss[lambda x: x.order_status == 'Delivered'].merge(df_payments, on='order_id', how='left')

| order_id | customer_id | order_status | order_purchase_dt | order_approved_dt | order_delivered_carrier_dt | order_delivered_customer_dt | order_estimated_delivery_dt | tmp_avg_reviews_score | tmp_payment_types | tmp_product_categories | tmp_customer_state | tmp_order_purchase_month | tmp_order_purchase_weekday | tmp_purchase_time_of_day | tmp_is_delayed | tmp_is_delivered | payment_sequential | payment_type | payment_installments | payment_value | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 2aa91108853cecb43c84a5dc5b277475 | afeb16c7f46396c0ed54acb45ccaaa40 | Delivered | 2017-09-29 08:52:58 | 2017-09-29 09:07:16 | NaT | 2017-11-20 19:44:47 | 2017-11-14 | 5 | Credit Card | moveis_decoracao | SP | September | Friday | Morning | Delayed | Delivered | 1 | Credit Card | 1 | 193.98 |

| 1 | 2d858f451373b04fb5c984a1cc2defaf | e08caf668d499a6d643dafd7c5cc498a | Delivered | 2017-05-25 23:22:43 | 2017-05-25 23:30:16 | NaT | NaT | 2017-06-23 | 5 | Credit Card | esporte_lazer | RS | May | Thursday | Night | Missing delivery dt | Delivered | 1 | Credit Card | 4 | 194.00 |

Key Observations:

Both orders with missing order_delivered_carrier_dt were paid via credit card.

Let’s analyze by average review score.

df_orders['order_delivered_carrier_dt'].explore.anomalies_by_categories(

anomaly_type='missing'

, pct_diff_threshold=-100

, include_columns='tmp_avg_reviews_score'

)

| Column | Category | Total | Anomaly | Anomaly Rate | Total % | Anomaly % | % Diff |

|---|---|---|---|---|---|---|---|

| tmp_avg_reviews_score | 1 | 11756 | 1350 | 11.5% | 11.8% | 75.7% | 63.9% |

| tmp_avg_reviews_score | 2 | 3244 | 133 | 4.1% | 3.3% | 7.5% | 4.2% |

| tmp_avg_reviews_score | 3 | 8268 | 107 | 1.3% | 8.3% | 6.0% | -2.3% |

| tmp_avg_reviews_score | 4 | 19129 | 64 | 0.3% | 19.2% | 3.6% | -15.6% |

| tmp_avg_reviews_score | 5 | 57044 | 129 | 0.2% | 57.4% | 7.2% | -50.1% |

Key Observations:

The difference in proportions is significantly higher for score 1. These orders were likely not delivered.

Review score 1 has the strongest correlation with missing carrier handover timestamps. This suggests these orders were not delivered, and customers were highly dissatisfied.

Let’s examine a word cloud from review messages.

tmp_miss = tmp_miss.merge(df_reviews, on='order_id', how='left')

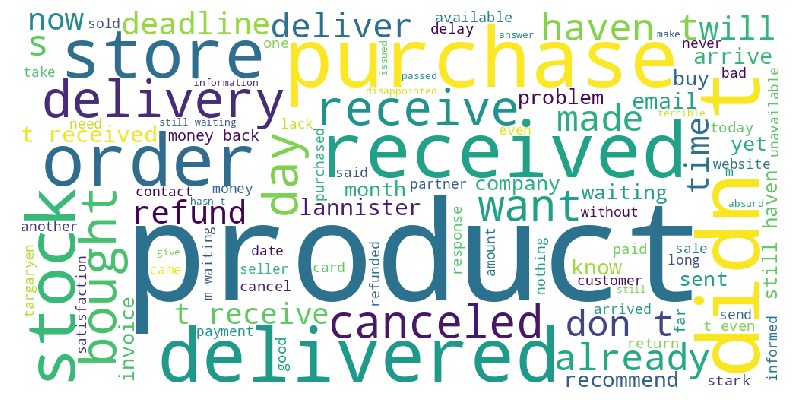

tmp_miss.viz.wordcloud('review_comment_message')

Key Observations:

Many words relate to delivery.

Let’s analyze the sentiment of the text.

tmp_miss.analysis.sentiment('review_comment_message')

Key Observations:

Negative reviews outnumber positive ones, and the boxplot body lies mostly below 0.

Let’s randomly sample 20 review comments. We’ll repeat this several times.

messages = (

tmp_miss['review_comment_message']

.dropna()

.sample(20)

.tolist()

)

display(messages)

['the supplier got in touch but did not provide any solution or delivery time it was not responsible for the delay it blamed a third party i just bought it and i want to receive it',

'until now i have not received my product i would like an opinion on delivery',

'product not delivered',

'i have not received the product because the seller cannot invoice in the name of the company with the cnpj requested purchase cancellation',

'the company doesn t have the product to deliver and canceled the purchase but the charge came on my credit card statement and they didn t even give a satisfaction about the reversal of the charge',

'i bought a product that was not available i suggest that you review the site every hour to avoid this type of inconvenience',

'no communication was passed to me',

'i bought the charger thinking of taking it on a trip i trusted the delivery time but i was fooled because the product has not yet arrived i requested the cancellation of the purchase disappointing',

'i have not received the product',

'dear; to date the product has not been delivered to me i would like to know what steps lannisters com will take so that i don t end up at a loss it was my 1st purchase to test the store',

'top product came on time',

'i bought it and until today it has not been delivered',

'i didn t receive the product',

'not at all satisfied because we bought the products they did not arrive on the expected date we are very upset with the site we need an urgent response from you',

'it took too long to inform that the product didn t have it in stock',

'the seller did not have the ordered product canceled',

'the product has not been delivered',

'it s been a month since i bought it and so far i haven t received all the merchandise purchased',

'product unavailable after purchase approval i would not recommend a partner i was very dissatisfied',

'the purchase was canceled without me asking']

Key Observations:

Based on review messages, many orders were not delivered, but a significant number were delivered.

Orders with missing carrier handover timestamps were more frequently undelivered compared to those with missing payment approval timestamps.

Some products may have been out of stock, and sellers did not hand them over to carriers.

However, since many orders were still delivered, missing values cannot be assumed to indicate order cancellation.

Let’s analyze by customer state.

df_orders['order_delivered_carrier_dt'].explore.anomalies_by_categories(

anomaly_type='missing'

, include_columns='tmp_customer_state'

)

| Column | Category | Total | Anomaly | Anomaly Rate | Total % | Anomaly % | % Diff |

|---|---|---|---|---|---|---|---|

| tmp_customer_state | SP | 41746 | 888 | 2.1% | 42.0% | 49.8% | 7.8% |

| tmp_customer_state | RO | 253 | 10 | 4.0% | 0.3% | 0.6% | 0.3% |

| tmp_customer_state | PI | 495 | 11 | 2.2% | 0.5% | 0.6% | 0.1% |

| tmp_customer_state | PR | 5045 | 91 | 1.8% | 5.1% | 5.1% | 0.0% |

| tmp_customer_state | RR | 46 | 1 | 2.2% | 0.0% | 0.1% | 0.0% |

| tmp_customer_state | AP | 68 | 1 | 1.5% | 0.1% | 0.1% | -0.0% |

| tmp_customer_state | SE | 350 | 6 | 1.7% | 0.4% | 0.3% | -0.0% |

| tmp_customer_state | AL | 413 | 7 | 1.7% | 0.4% | 0.4% | -0.0% |

| tmp_customer_state | MA | 747 | 12 | 1.6% | 0.8% | 0.7% | -0.1% |

| tmp_customer_state | PB | 536 | 8 | 1.5% | 0.5% | 0.4% | -0.1% |

| tmp_customer_state | AM | 148 | 1 | 0.7% | 0.1% | 0.1% | -0.1% |

| tmp_customer_state | MS | 715 | 11 | 1.5% | 0.7% | 0.6% | -0.1% |

| tmp_customer_state | TO | 280 | 2 | 0.7% | 0.3% | 0.1% | -0.2% |

| tmp_customer_state | RN | 485 | 4 | 0.8% | 0.5% | 0.2% | -0.3% |

| tmp_customer_state | SC | 3637 | 60 | 1.6% | 3.7% | 3.4% | -0.3% |

| tmp_customer_state | GO | 2020 | 31 | 1.5% | 2.0% | 1.7% | -0.3% |

| tmp_customer_state | MG | 11635 | 203 | 1.7% | 11.7% | 11.4% | -0.3% |

| tmp_customer_state | CE | 1336 | 18 | 1.3% | 1.3% | 1.0% | -0.3% |

| tmp_customer_state | PE | 1652 | 23 | 1.4% | 1.7% | 1.3% | -0.4% |

| tmp_customer_state | BA | 3380 | 53 | 1.6% | 3.4% | 3.0% | -0.4% |

| tmp_customer_state | DF | 2140 | 30 | 1.4% | 2.2% | 1.7% | -0.5% |

| tmp_customer_state | PA | 975 | 9 | 0.9% | 1.0% | 0.5% | -0.5% |

| tmp_customer_state | MT | 907 | 6 | 0.7% | 0.9% | 0.3% | -0.6% |

| tmp_customer_state | RS | 5466 | 84 | 1.5% | 5.5% | 4.7% | -0.8% |

| tmp_customer_state | ES | 2033 | 19 | 0.9% | 2.0% | 1.1% | -1.0% |

| tmp_customer_state | RJ | 12852 | 194 | 1.5% | 12.9% | 10.9% | -2.0% |

Key Observations:

The difference in proportions is slightly higher in São Paulo compared to other states.

Missing Values in order_delivered_customer_dt

tmp_miss = df_orders[df_orders['order_delivered_customer_dt'].isna()]

Let’s examine the distribution of missing values in customer delivery time.

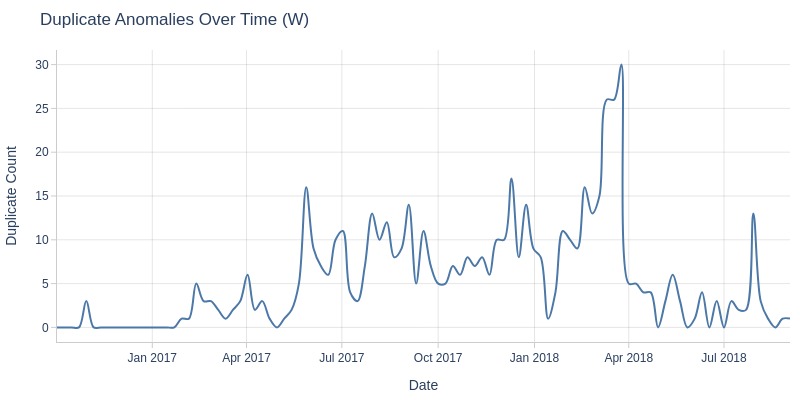

df_orders['order_delivered_customer_dt'].explore.anomalies_over_time(

time_column='order_purchase_dt'

, anomaly_type='missing'

, freq='W'

)

Key Observations:

In November 2017, there was a spike in orders missing customer delivery timestamps.

Let’s analyze by order status.

df_orders['order_delivered_customer_dt'].explore.anomalies_by_categories(

anomaly_type='missing'

, pct_diff_threshold=-100

, include_columns='order_status'

)

| Column | Category | Total | Anomaly | Anomaly Rate | Total % | Anomaly % | % Diff |

|---|---|---|---|---|---|---|---|

| order_status | Shipped | 1107 | 1107 | 100.0% | 1.1% | 37.3% | 36.2% |

| order_status | Canceled | 625 | 619 | 99.0% | 0.6% | 20.9% | 20.2% |

| order_status | Unavailable | 609 | 609 | 100.0% | 0.6% | 20.5% | 19.9% |

| order_status | Invoiced | 314 | 314 | 100.0% | 0.3% | 10.6% | 10.3% |

| order_status | Processing | 301 | 301 | 100.0% | 0.3% | 10.2% | 9.8% |

| order_status | Created | 5 | 5 | 100.0% | 0.0% | 0.2% | 0.2% |

| order_status | Approved | 2 | 2 | 100.0% | 0.0% | 0.1% | 0.1% |

| order_status | Delivered | 96478 | 8 | 0.0% | 97.0% | 0.3% | -96.8% |

Key Observations:

There are 8 orders with “delivered” status but missing delivery timestamps.

Let’s analyze by customer state.

df_orders['order_delivered_customer_dt'].explore.anomalies_by_categories(

anomaly_type='missing'

, include_columns='tmp_customer_state'

)

| Column | Category | Total | Anomaly | Anomaly Rate | Total % | Anomaly % | % Diff |

|---|---|---|---|---|---|---|---|

| tmp_customer_state | RJ | 12852 | 499 | 3.9% | 12.9% | 16.8% | 3.9% |

| tmp_customer_state | BA | 3380 | 124 | 3.7% | 3.4% | 4.2% | 0.8% |

| tmp_customer_state | CE | 1336 | 57 | 4.3% | 1.3% | 1.9% | 0.6% |

| tmp_customer_state | PE | 1652 | 59 | 3.6% | 1.7% | 2.0% | 0.3% |

| tmp_customer_state | MA | 747 | 30 | 4.0% | 0.8% | 1.0% | 0.3% |

| tmp_customer_state | SP | 41746 | 1251 | 3.0% | 42.0% | 42.2% | 0.2% |

| tmp_customer_state | SE | 350 | 15 | 4.3% | 0.4% | 0.5% | 0.2% |

| tmp_customer_state | PI | 495 | 19 | 3.8% | 0.5% | 0.6% | 0.1% |

| tmp_customer_state | AL | 413 | 16 | 3.9% | 0.4% | 0.5% | 0.1% |

| tmp_customer_state | RR | 46 | 5 | 10.9% | 0.0% | 0.2% | 0.1% |

| tmp_customer_state | PB | 536 | 19 | 3.5% | 0.5% | 0.6% | 0.1% |

| tmp_customer_state | GO | 2020 | 63 | 3.1% | 2.0% | 2.1% | 0.1% |

| tmp_customer_state | RO | 253 | 10 | 4.0% | 0.3% | 0.3% | 0.1% |

| tmp_customer_state | PA | 975 | 29 | 3.0% | 1.0% | 1.0% | -0.0% |

| tmp_customer_state | AP | 68 | 1 | 1.5% | 0.1% | 0.0% | -0.0% |

| tmp_customer_state | AM | 148 | 3 | 2.0% | 0.1% | 0.1% | -0.0% |

| tmp_customer_state | AC | 81 | 1 | 1.2% | 0.1% | 0.0% | -0.0% |

| tmp_customer_state | TO | 280 | 6 | 2.1% | 0.3% | 0.2% | -0.1% |

| tmp_customer_state | RN | 485 | 11 | 2.3% | 0.5% | 0.4% | -0.1% |

| tmp_customer_state | DF | 2140 | 60 | 2.8% | 2.2% | 2.0% | -0.1% |

| tmp_customer_state | MT | 907 | 21 | 2.3% | 0.9% | 0.7% | -0.2% |

| tmp_customer_state | MS | 715 | 14 | 2.0% | 0.7% | 0.5% | -0.2% |

| tmp_customer_state | SC | 3637 | 90 | 2.5% | 3.7% | 3.0% | -0.6% |

| tmp_customer_state | ES | 2033 | 38 | 1.9% | 2.0% | 1.3% | -0.8% |

| tmp_customer_state | PR | 5045 | 122 | 2.4% | 5.1% | 4.1% | -1.0% |

| tmp_customer_state | RS | 5466 | 122 | 2.2% | 5.5% | 4.1% | -1.4% |

| tmp_customer_state | MG | 11635 | 280 | 2.4% | 11.7% | 9.4% | -2.3% |

Key Observations:

The difference in proportions is slightly higher in Rio de Janeiro.

Let’s examine these 8 delivered orders.

tmp_miss[lambda x: x.order_status == 'Delivered'].merge(df_payments, on='order_id', how='left')

| order_id | customer_id | order_status | order_purchase_dt | order_approved_dt | order_delivered_carrier_dt | order_delivered_customer_dt | order_estimated_delivery_dt | tmp_avg_reviews_score | tmp_payment_types | tmp_product_categories | tmp_customer_state | tmp_order_purchase_month | tmp_order_purchase_weekday | tmp_purchase_time_of_day | tmp_is_delayed | tmp_is_delivered | payment_sequential | payment_type | payment_installments | payment_value | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 2d1e2d5bf4dc7227b3bfebb81328c15f | ec05a6d8558c6455f0cbbd8a420ad34f | Delivered | 2017-11-28 17:44:07 | 2017-11-28 17:56:40 | 2017-11-30 18:12:23 | NaT | 2017-12-18 | 5 | Credit Card | automotivo | SP | November | Tuesday | Evening | Missing delivery dt | Delivered | 1 | Credit Card | 3 | 134.83 |

| 1 | f5dd62b788049ad9fc0526e3ad11a097 | 5e89028e024b381dc84a13a3570decb4 | Delivered | 2018-06-20 06:58:43 | 2018-06-20 07:19:05 | 2018-06-25 08:05:00 | NaT | 2018-07-16 | 5 | Debit Card | industria_comercio_e_negocios | SP | June | Wednesday | Morning | Missing delivery dt | Delivered | 1 | Debit Card | 1 | 354.24 |

| 2 | 2ebdfc4f15f23b91474edf87475f108e | 29f0540231702fda0cfdee0a310f11aa | Delivered | 2018-07-01 17:05:11 | 2018-07-01 17:15:12 | 2018-07-03 13:57:00 | NaT | 2018-07-30 | 5 | Credit Card | relogios_presentes | SP | July | Sunday | Evening | Missing delivery dt | Delivered | 1 | Credit Card | 3 | 158.07 |

| 3 | e69f75a717d64fc5ecdfae42b2e8e086 | cfda40ca8dd0a5d486a9635b611b398a | Delivered | 2018-07-01 22:05:55 | 2018-07-01 22:15:14 | 2018-07-03 13:57:00 | NaT | 2018-07-30 | 5 | Credit Card | relogios_presentes | SP | July | Sunday | Evening | Missing delivery dt | Delivered | 1 | Credit Card | 1 | 158.07 |

| 4 | 0d3268bad9b086af767785e3f0fc0133 | 4f1d63d35fb7c8999853b2699f5c7649 | Delivered | 2018-07-01 21:14:02 | 2018-07-01 21:29:54 | 2018-07-03 09:28:00 | NaT | 2018-07-24 | 5 | Credit Card | brinquedos | SP | July | Sunday | Evening | Missing delivery dt | Delivered | 1 | Credit Card | 4 | 204.62 |

| 5 | 2d858f451373b04fb5c984a1cc2defaf | e08caf668d499a6d643dafd7c5cc498a | Delivered | 2017-05-25 23:22:43 | 2017-05-25 23:30:16 | NaT | NaT | 2017-06-23 | 5 | Credit Card | esporte_lazer | RS | May | Thursday | Night | Missing delivery dt | Delivered | 1 | Credit Card | 4 | 194.00 |

| 6 | ab7c89dc1bf4a1ead9d6ec1ec8968a84 | dd1b84a7286eb4524d52af4256c0ba24 | Delivered | 2018-06-08 12:09:39 | 2018-06-08 12:36:39 | 2018-06-12 14:10:00 | NaT | 2018-06-26 | 1 | Credit Card | informatica_acessorios | SP | June | Friday | Afternoon | Missing delivery dt | Delivered | 1 | Credit Card | 5 | 120.12 |

| 7 | 20edc82cf5400ce95e1afacc25798b31 | 28c37425f1127d887d7337f284080a0f | Delivered | 2018-06-27 16:09:12 | 2018-06-27 16:29:30 | 2018-07-03 19:26:00 | NaT | 2018-07-19 | 5 | Credit Card | livros_interesse_geral | SP | June | Wednesday | Afternoon | Missing delivery dt | Delivered | 1 | Credit Card | 1 | 54.97 |

Key Observations:

7 out of 8 orders with missing order_delivered_customer_dt were paid via credit card, and 1 was paid via debit card.

Let’s analyze by average review score.

df_orders['order_delivered_customer_dt'].explore.anomalies_by_categories(

anomaly_type='missing'

, pct_diff_threshold=-100

, include_columns='tmp_avg_reviews_score'

)

| Column | Category | Total | Anomaly | Anomaly Rate | Total % | Anomaly % | % Diff |

|---|---|---|---|---|---|---|---|

| tmp_avg_reviews_score | 1 | 11756 | 2086 | 17.7% | 11.8% | 70.4% | 58.5% |

| tmp_avg_reviews_score | 2 | 3244 | 223 | 6.9% | 3.3% | 7.5% | 4.3% |

| tmp_avg_reviews_score | 3 | 8268 | 235 | 2.8% | 8.3% | 7.9% | -0.4% |

| tmp_avg_reviews_score | 4 | 19129 | 156 | 0.8% | 19.2% | 5.3% | -14.0% |

| tmp_avg_reviews_score | 5 | 57044 | 265 | 0.5% | 57.4% | 8.9% | -48.4% |

Key Observations:

The difference in proportions is significantly higher for score 1. These orders were likely not delivered.

Let’s examine a word cloud from review messages.

tmp_miss = tmp_miss.merge(df_reviews, on='order_id', how='left')

tmp_miss.viz.wordcloud('review_comment_message')

Key Observations:

Many words relate to delivery.

Let’s analyze the sentiment of the text.

tmp_miss.analysis.sentiment('review_comment_message')

Key Observations:

Negative reviews outnumber positive ones, and the boxplot body lies mostly below 0.

Let’s randomly sample 20 review comments.

We’ll repeat this several times.

messages = (

tmp_miss['review_comment_message']

.dropna()

.sample(20)

.tolist()

)

display(messages)

['good afternoon to date i still have not received my purchase i tried to contact you and i couldn t i want the amount paid to be refunded or the merchandise urgently awaiting return',

'good morning i received my order on time but the pushing ball came dry and peeling how should i proceed to receive one in good condition awaiting vanessa',

'total neglect of the store does not receive the product and they did not contact me a long time later they sent an email to my sister and did not even leave a phone number for me to contact the store i do not recommend',

'until now i have not received my product i would like an opinion on delivery',

'i am disappointed with delay in delivery and the lack of satisfaction or explanation of the store',

'i didn t receive the product',

'i m waiting until today the product has already completed 30 days of purchase',

'they don t deliver bad store i do not recommend i want my money back',

'i have not received the product yet please help',

'they exchanged my product they sent me someone else s product',

'i do not recommend buying from this site',

'the product arrived at me diverging from what was reported on the website about how it works missing speed reducer button',

'i want the value back',

'product if you want it left to be delivered i had to buy it elsewhere',

'i have not received this product and no estimated arrival date',

'it seemed to be good at first but the delivery period has already expired and i have no information about the product',

'i am still waiting for the delivery of the product i ordered and it has already exceeded the expected delivery date i am very dissatisfied',

'i wait to receive the product',

'i didn t receive the product',

'seller still not send my order']

Key Observations:

Based on review messages, some orders were not delivered, but this is less frequent than with missing payment approval or carrier handover timestamps.

Many messages confirm order receipt. Thus, these orders cannot be assumed canceled.

del tmp_miss

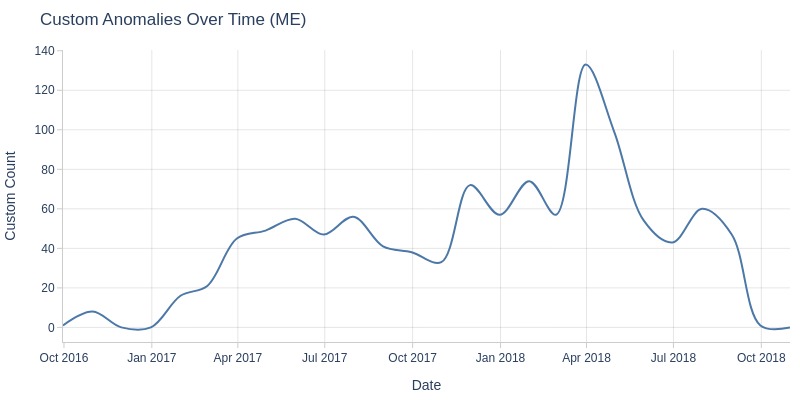

Anomalies in Order Status#

We have many orders with statuses other than “delivered.” This is unusual. Let’s investigate this.

Let’s examine by status.

df_orders.order_status.value_counts()

order_status

Delivered 96478

Shipped 1107

Canceled 625

Unavailable 609

Invoiced 314

Processing 301

Created 5

Approved 2

Name: count, dtype: int64

Let’s look at missing values in the timestamps by order status.

columns = [

"order_status",

"order_purchase_dt",

"order_approved_dt",

"order_delivered_carrier_dt",

"order_delivered_customer_dt",

"order_estimated_delivery_dt",

]

(

df_orders[columns].pivot_table(

index='order_status',

aggfunc=lambda x: x.isna().sum(),

observed=True,

)

.reset_index()

[columns]

)

| order_status | order_purchase_dt | order_approved_dt | order_delivered_carrier_dt | order_delivered_customer_dt | order_estimated_delivery_dt | |

|---|---|---|---|---|---|---|

| 0 | Approved | 0 | 0 | 2 | 2 | 0 |

| 1 | Canceled | 0 | 141 | 550 | 619 | 0 |

| 2 | Created | 0 | 5 | 5 | 5 | 0 |

| 3 | Delivered | 0 | 14 | 2 | 8 | 0 |

| 4 | Invoiced | 0 | 0 | 314 | 314 | 0 |

| 5 | Processing | 0 | 0 | 301 | 301 | 0 |

| 6 | Shipped | 0 | 0 | 0 | 1107 | 0 |

| 7 | Unavailable | 0 | 0 | 609 | 609 | 0 |

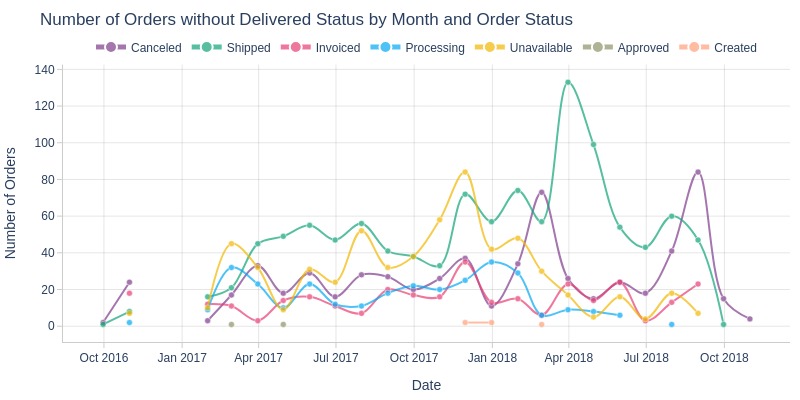

Let’s look at the number of orders without the delivered status over time.

labels = dict(

order_purchase_dt = 'Date',

order_id = 'Number of Orders',

order_status = 'Order Status',

)

df_orders[lambda x: x.order_status != 'Delivered'].viz.line(

x='order_purchase_dt',

y='order_id',

color='order_status',

agg_func='nunique',

freq='ME',

labels=labels,

markers=True,

title='Number of Orders without Delivered Status by Month and Order Status',

)

Key Observations:

In March and April 2018, there was a sharp spike in orders stuck in the “shipped” status.

In February and August 2018, there were spikes in the “canceled” status.

In November 2017, there was a spike in the “unavailable” status. This month included Black Friday.

Let’s examine each status separately.

created

Let’s look at the rows in the dataframe with orders that have the status ‘created’.

df_orders[lambda x: x.order_status == 'Created']

| order_id | customer_id | order_status | order_purchase_dt | order_approved_dt | order_delivered_carrier_dt | order_delivered_customer_dt | order_estimated_delivery_dt | tmp_avg_reviews_score | tmp_payment_types | tmp_product_categories | tmp_customer_state | tmp_order_purchase_month | tmp_order_purchase_weekday | tmp_purchase_time_of_day | tmp_is_delayed | tmp_is_delivered | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 7434 | b5359909123fa03c50bdb0cfed07f098 | 438449d4af8980d107bf04571413a8e7 | Created | 2017-12-05 01:07:52 | NaT | NaT | NaT | 2018-01-11 | 1 | Credit Card | Missing in Items | SP | December | Tuesday | Night | Missing delivery dt | Not Delivered |

| 9238 | dba5062fbda3af4fb6c33b1e040ca38f | 964a6df3d9bdf60fe3e7b8bb69ed893a | Created | 2018-02-09 17:21:04 | NaT | NaT | NaT | 2018-03-07 | 1 | Boleto | Missing in Items | DF | February | Friday | Evening | Missing delivery dt | Not Delivered |

| 21441 | 7a4df5d8cff4090e541401a20a22bb80 | 725e9c75605414b21fd8c8d5a1c2f1d6 | Created | 2017-11-25 11:10:33 | NaT | NaT | NaT | 2017-12-12 | 1 | Boleto | Missing in Items | RJ | November | Saturday | Morning | Missing delivery dt | Not Delivered |

| 55086 | 35de4050331c6c644cddc86f4f2d0d64 | 4ee64f4bfc542546f422da0aeb462853 | Created | 2017-12-05 01:07:58 | NaT | NaT | NaT | 2018-01-08 | 1 | Credit Card | Missing in Items | RS | December | Tuesday | Night | Missing delivery dt | Not Delivered |

| 58958 | 90ab3e7d52544ec7bc3363c82689965f | 7d61b9f4f216052ba664f22e9c504ef1 | Created | 2017-11-06 13:12:34 | NaT | NaT | NaT | 2017-12-01 | 5 | Credit Card | Missing in Items | PR | November | Monday | Afternoon | Missing delivery dt | Not Delivered |

Key Observations:

One order has a rating of 5, while four orders have a rating of 1.

The process stops after purchase, before payment approval.

Let’s look at the review messages.

messages = (

df_orders[lambda x: x.order_status == 'Created']

.merge(df_reviews, on='order_id', how='left')

['review_comment_message']

.tolist()

)

display(messages)

['the product has not arrived until today',

'i have not received my product i am not satisfied i am waiting to this day',

nan,

'i have been awaiting the product since december and i have not received it deceptualized with the americana because besides the delay i gave a waterway that does not fulfill the advertising delay my order',

'although the product was out of stock the company made contact to notify and canceled the order']

Key Observations:

Based on review comments, these orders were not delivered.

approved

Let’s look at the rows.

df_orders[lambda x: x.order_status == 'Approved']

| order_id | customer_id | order_status | order_purchase_dt | order_approved_dt | order_delivered_carrier_dt | order_delivered_customer_dt | order_estimated_delivery_dt | tmp_avg_reviews_score | tmp_payment_types | tmp_product_categories | tmp_customer_state | tmp_order_purchase_month | tmp_order_purchase_weekday | tmp_purchase_time_of_day | tmp_is_delayed | tmp_is_delivered | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 44897 | a2e4c44360b4a57bdff22f3a4630c173 | 8886130db0ea6e9e70ba0b03d7c0d286 | Approved | 2017-02-06 20:18:17 | 2017-02-06 20:30:19 | NaT | NaT | 2017-03-01 | 1 | Credit Card | informatica_acessorios | MG | February | Monday | Evening | Missing delivery dt | Not Delivered |

| 88457 | 132f1e724165a07f6362532bfb97486e | b2191912d8ad6eac2e4dc3b6e1459515 | Approved | 2017-04-25 01:25:34 | 2017-04-30 20:32:41 | NaT | NaT | 2017-05-22 | 4 | Credit Card | utilidades_domesticas | SP | April | Tuesday | Night | Missing delivery dt | Not Delivered |

Key Observations:

One order received a rating of 1, the other a 4.

The process stops after payment approval, before carrier handover.

Let’s look at the review messages.

messages = (

df_orders[lambda x: x.order_status == 'Approved']

.merge(df_reviews, on='order_id', how='left')

['review_comment_message']

.tolist()

)

display(messages)

[nan, nan]

Key Observations:

No comments were left for these orders.

processing

Let’s look at orders with the status ‘processing’ by month.

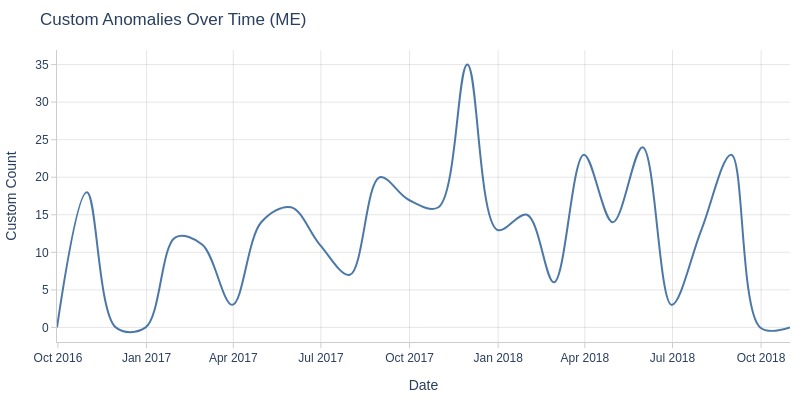

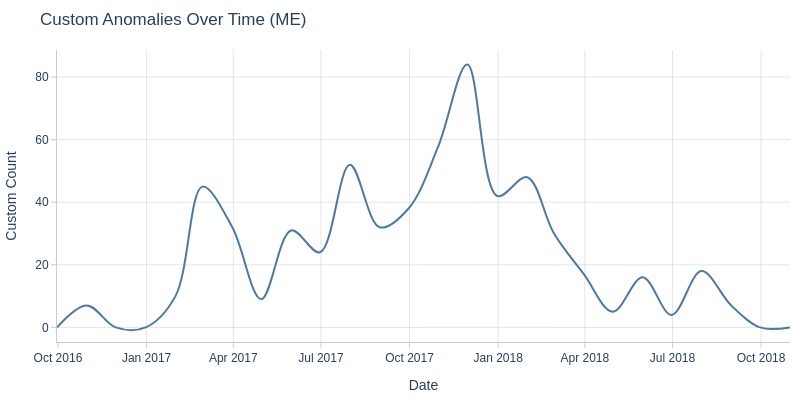

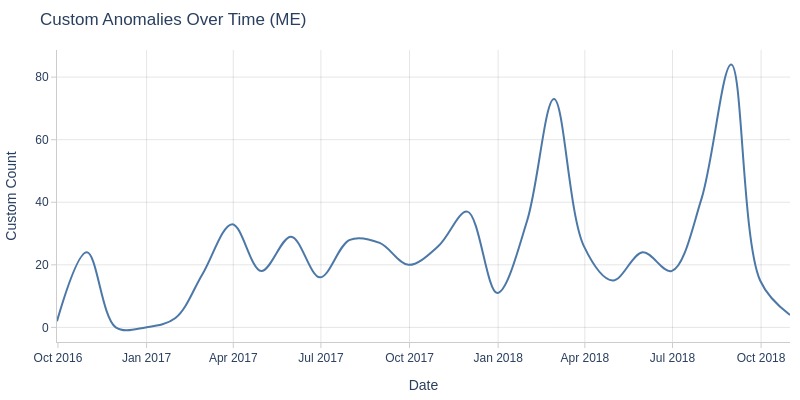

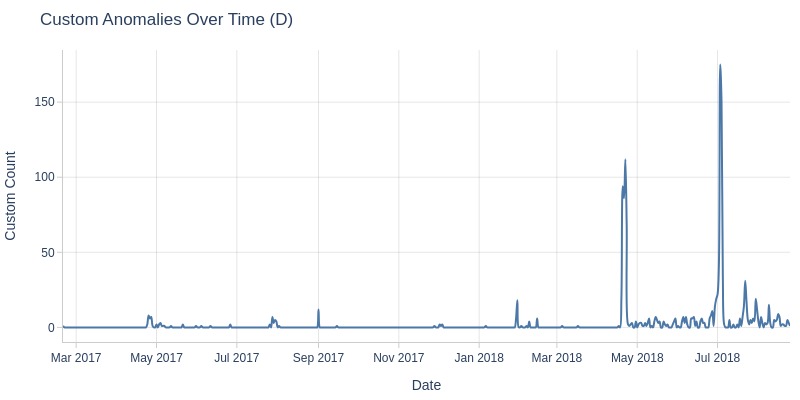

df_orders['order_status'].explore.anomalies_over_time(

time_column='order_purchase_dt'

, custom_mask=df_orders.order_status == 'Processing'

, freq='ME'

)

Let’s look at the count of each order status.

tmp_anomal = df_orders[lambda x: x.order_status == 'Processing']

(

tmp_anomal[['order_purchase_dt', 'order_approved_dt', 'order_delivered_carrier_dt', 'order_delivered_customer_dt', 'order_estimated_delivery_dt']]

.count()

.to_frame('count')

)

| count | |

|---|---|

| order_purchase_dt | 301 |

| order_approved_dt | 301 |

| order_delivered_carrier_dt | 0 |

| order_delivered_customer_dt | 0 |

| order_estimated_delivery_dt | 301 |

Key Observations:

The process stops after payment approval, before carrier handover.

Let’s look at it broken down by the average order rating.

df_orders['order_status'].explore.anomalies_by_categories(

custom_mask=df_orders.order_status == 'Processing'

, include_columns='tmp_avg_reviews_score'

)

| Column | Category | Total | Anomaly | Anomaly Rate | Total % | Anomaly % | % Diff |

|---|---|---|---|---|---|---|---|

| tmp_avg_reviews_score | 1 | 11756 | 260 | 2.2% | 11.8% | 86.4% | 74.6% |

| tmp_avg_reviews_score | 2 | 3244 | 19 | 0.6% | 3.3% | 6.3% | 3.1% |

| tmp_avg_reviews_score | 3 | 8268 | 10 | 0.1% | 8.3% | 3.3% | -5.0% |

| tmp_avg_reviews_score | 4 | 19129 | 6 | 0.0% | 19.2% | 2.0% | -17.2% |

| tmp_avg_reviews_score | 5 | 57044 | 6 | 0.0% | 57.4% | 2.0% | -55.4% |

Key Observations:

86% of orders with “processing” status have a rating of 1.

6% of orders have a rating of 2.

Customers are clearly dissatisfied.

Let’s look at it broken down by payment type.

df_orders['order_status'].explore.anomalies_by_categories(

custom_mask=df_orders.order_status == 'Processing'

, include_columns='tmp_payment_types'

)

| Column | Category | Total | Anomaly | Anomaly Rate | Total % | Anomaly % | % Diff |

|---|---|---|---|---|---|---|---|

| tmp_payment_types | Boleto | 19784 | 70 | 0.4% | 19.9% | 23.3% | 3.4% |

| tmp_payment_types | Voucher | 1621 | 7 | 0.4% | 1.6% | 2.3% | 0.7% |

| tmp_payment_types | Credit Card, Voucher | 1127 | 3 | 0.3% | 1.1% | 1.0% | -0.1% |

| tmp_payment_types | Voucher, Credit Card | 1118 | 1 | 0.1% | 1.1% | 0.3% | -0.8% |

| tmp_payment_types | Debit Card | 1527 | 2 | 0.1% | 1.5% | 0.7% | -0.9% |

| tmp_payment_types | Credit Card | 74259 | 218 | 0.3% | 74.7% | 72.4% | -2.3% |

Key Observations:

The “boleto” payment type has a slightly higher proportion difference.

Let’s randomly sample 20 review comments.

We’ll repeat this several times.

tmp_anomal = tmp_anomal.merge(df_reviews, on='order_id', how='left')

messages = (

tmp_anomal['review_comment_message']

.dropna()

.sample(20)

.tolist()

)

display(messages)

['i made a purchase my orders came separate so far so good but a product was missing and it has not yet been delivered and that s what i paid dearly for shipping the deadline was the 6th it s already the 8th and i m not even satisfied',

'the deadline has passed and the product has not arrived yet what happened',

'i m still waiting for my product it hasn t arrived yet',

'i always bought on this site and everything arrived correctly only in this last purchase that i had problems with the delivery of one of the products which did not arrive',

'the seller contacted me but did not complete the sale',

'i did not receive the product i wanted i am waiting for the refund the service was so bad they didn t even issue a note',

'i no longer buy from this baratheon partner for 30 days since i bought it and the invoice has not yet been issued',

'i have not received the product purchased since 12/26/17 i had no response from the store giving satisfaction about the purchase i request a refund for the purchase',

'terrible because i ordered a product i already paid it said it would arrive by 24 11 2017 and the product did not arrive on top of that it said that it was unavailable',

'i am disappointed with the late delivery',

'my product is overdue it was supposed to be delivered on 03/10',

'they did not send the product and did not give satisfaction',

'i bought it for the first time for never again deceptionedx',

'i did not receive the product they were delivering me to di 15 11 2017 and then it was for the day 25 11 2017 and nothing i am disappointed with the seller and lannister because they took the order i can not life',

'i just didn t receive the product',

'i already made purchases through baratheon and everything went well only this request presented problems and if i didn t go after it i would never know about the situation the invoice has already been paid i have not received the product and not even a satisfaction',

'i m waiting for the product to arrive',

'absurd to buy a product and it is not available',

'did not deliver',

'i buy it all the time never had a problem the shirts purchased received and returned were three dobby i am currently choosing others to use the vale']

Key Observations:

Based on review messages, orders were not delivered.

Some reviews mention items being out of stock.

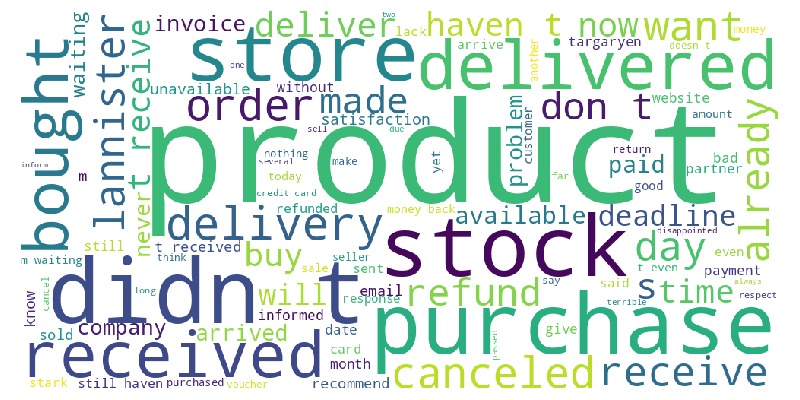

Let’s examine a word cloud from review messages.

tmp_anomal.viz.wordcloud('review_comment_message')

Key Observations:

Most words relate to delivery.

Let’s analyze the sentiment of the text.

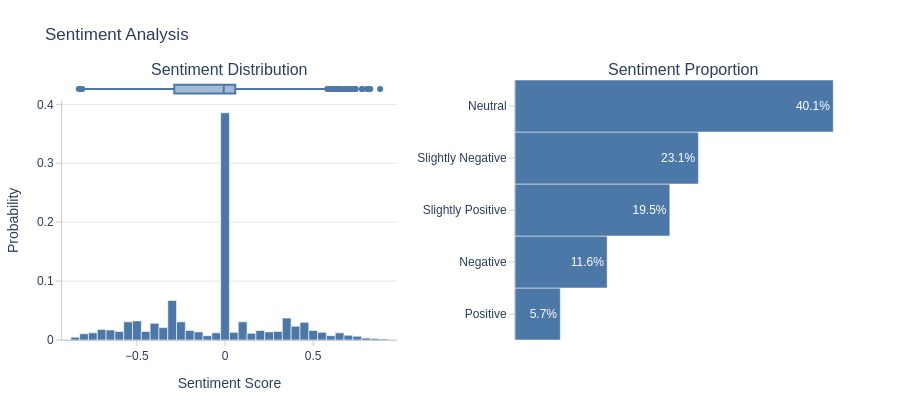

tmp_anomal.analysis.sentiment('review_comment_message')

Key Observations:

Negative reviews significantly outnumber positive ones, and the boxplot lies in the negative zone.

invoiced

Let’s look at orders with the status ‘invoiced’ by month.

df_orders['order_status'].explore.anomalies_over_time(

time_column='order_purchase_dt'

, custom_mask=df_orders.order_status == 'Invoiced'

, freq='ME'

)

Let’s look at the count of each order status.

tmp_anomal = df_orders[lambda x: x.order_status == 'Invoiced']

(

tmp_anomal[['order_purchase_dt', 'order_approved_dt', 'order_delivered_carrier_dt', 'order_delivered_customer_dt', 'order_estimated_delivery_dt']]

.count()

.to_frame('count')

)

| count | |

|---|---|

| order_purchase_dt | 314 |

| order_approved_dt | 314 |

| order_delivered_carrier_dt | 0 |

| order_delivered_customer_dt | 0 |

| order_estimated_delivery_dt | 314 |

Key Observations:

The process stops after payment approval, before carrier handover.

Let’s look at it broken down by the average order rating.

df_orders['order_status'].explore.anomalies_by_categories(

custom_mask=df_orders.order_status == 'Invoiced'

, include_columns='tmp_avg_reviews_score'

)

| Column | Category | Total | Anomaly | Anomaly Rate | Total % | Anomaly % | % Diff |

|---|---|---|---|---|---|---|---|

| tmp_avg_reviews_score | 1 | 11756 | 232 | 2.0% | 11.8% | 73.9% | 62.1% |

| tmp_avg_reviews_score | 2 | 3244 | 28 | 0.9% | 3.3% | 8.9% | 5.7% |

| tmp_avg_reviews_score | 3 | 8268 | 17 | 0.2% | 8.3% | 5.4% | -2.9% |

| tmp_avg_reviews_score | 4 | 19129 | 13 | 0.1% | 19.2% | 4.1% | -15.1% |

| tmp_avg_reviews_score | 5 | 57044 | 24 | 0.0% | 57.4% | 7.6% | -49.7% |

Key Observations:

74% of orders with “invoiced” status have a rating of 1.

9% of orders have a rating of 2.

Customers are clearly dissatisfied.

Let’s randomly sample 20 review comments.

We’ll repeat this several times.

tmp_anomal = tmp_anomal.merge(df_reviews, on='order_id', how='left')

messages = (

tmp_anomal['review_comment_message']

.dropna()

.sample(20)

.tolist()

)

display(messages)

['my product did not arrive delivery time of 34 days and still not delivered i do not recommend',

'i bought it to give to my daughter for christmas i hope it arrives on time',

'good morning i purchased more than 30 days ago and i did not receive the product i requested a cancellation but i did not receive a response',

'a simple computer mouse shouldn t take so long to deliver',

'they gave me a huge deadline to send the product and on the last day they sent me an email saying they don t have the product now i have the bureaucracy to get my money back',

'i like shopping in this store',

'takes too long to deliver i hope the product still comes',

'terrible company did not deliver the product and does not respond to my cancellation requests i already paid the first installment and had no response',

'after a long time only today i received a message with the following information: good afternoon walter thanks for getting in touch we are sorry for the delay in getting back to you your order was not',

'unfortunately it happened in no other purchase of several that i have already made on lannister com i did not receive the product but this time i did not receive it a pity',

'it was the first and last time i buy from this company never',

'no information total sloppiness',

'expensive i requested the cancellation of this order on the same day that the invoice was issued i called the supplier informed in the nf and canceled the shipment of the goods i am waiting for an american refund form',

'i don t recommend this store because they sold me a backpack that they didn t have in stock',

'the product has not yet arrived it has passed and much of the deadline and no one gives me a return',

'i bought two white stools on the website to be delivered by 03/13 and so far i haven t received my house well i m waiting for action or i m going to look for my consumer rights',

'absurd they said that until the 10/04th serious delivery but so far nothing and it s been paid for my orders for a while',

'i have already bought several times on the lannister site;but this last time i made a purchase of a toner at 04 10 16 and only promised for 25 11 16 and still not i received the product',

'very disappointed with lannister s targaryen partner purchased product paid and not delivered a lot of disappointment please speed up delivery or want my money back',

'the product order was placed on 07/11 with delivery forecast for the 21st the invoice was only issued on the 22nd; it s already the 24th and the product hasn t even left for delivery']

Key Observations:

Review messages indicate orders were not delivered.

Some reviews mention items being out of stock.

Let’s examine a word cloud from review messages.

tmp_anomal.viz.wordcloud('review_comment_message')

Key Observations:

Many words relate to delivery.

Let’s analyze the sentiment of the text.

tmp_anomal.analysis.sentiment('review_comment_message')

Key Observations:

Negative reviews significantly outnumber positive ones, and the boxplot mostly lies below 0.

unavailable

Let’s look at orders with the status ‘unavailable’ by month.

df_orders['order_status'].explore.anomalies_over_time(

time_column='order_purchase_dt'

, custom_mask=df_orders.order_status == 'Unavailable'

, freq='ME'

)

Let’s look at the count of each order status.

tmp_anomal = df_orders[lambda x: x.order_status == 'Unavailable']

(

tmp_anomal[['order_purchase_dt', 'order_approved_dt', 'order_delivered_carrier_dt', 'order_delivered_customer_dt', 'order_estimated_delivery_dt']]

.count()

.to_frame('count')

)

| count | |

|---|---|

| order_purchase_dt | 609 |

| order_approved_dt | 609 |

| order_delivered_carrier_dt | 0 |

| order_delivered_customer_dt | 0 |

| order_estimated_delivery_dt | 609 |

Key Observations:

The process stops after payment approval, before carrier handover.

Let’s look by the customer’s state.

df_orders['order_status'].explore.anomalies_by_categories(

custom_mask=df_orders.order_status == 'Unavailable'

, pct_diff_threshold=1

, include_columns='tmp_customer_state'

)

| Column | Category | Total | Anomaly | Anomaly Rate | Total % | Anomaly % | % Diff |

|---|---|---|---|---|---|---|---|

| tmp_customer_state | SP | 41746 | 292 | 0.7% | 42.0% | 47.9% | 6.0% |

| tmp_customer_state | PR | 5045 | 40 | 0.8% | 5.1% | 6.6% | 1.5% |

Key Observations:

The proportion of missing values in São Paulo is higher than in the full dataset.

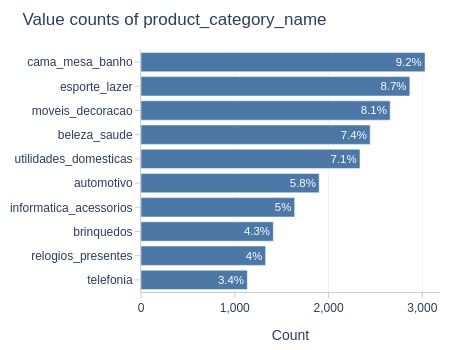

Let’s look by product category.

df_orders['order_status'].explore.anomalies_by_categories(

custom_mask=df_orders.order_status == 'Unavailable'

, pct_diff_threshold=0

, include_columns='tmp_product_categories'

)

| Column | Category | Total | Anomaly | Anomaly Rate | Total % | Anomaly % | % Diff |

|---|---|---|---|---|---|---|---|

| tmp_product_categories | Missing in Items | 775 | 603 | 77.8% | 0.8% | 99.0% | 98.2% |

Key Observations:

99% of orders lack a category, meaning they are not in the items table.

Let’s look at it broken down by payment type.

df_orders['order_status'].explore.anomalies_by_categories(

custom_mask=df_orders.order_status == 'Unavailable'

, pct_diff_threshold=0

, include_columns='tmp_payment_types'

)

| Column | Category | Total | Anomaly | Anomaly Rate | Total % | Anomaly % | % Diff |

|---|---|---|---|---|---|---|---|

| tmp_payment_types | Boleto | 19784 | 150 | 0.8% | 19.9% | 24.6% | 4.7% |

| tmp_payment_types | Voucher, Credit Card | 1118 | 9 | 0.8% | 1.1% | 1.5% | 0.4% |

| tmp_payment_types | Credit Card, Voucher | 1127 | 8 | 0.7% | 1.1% | 1.3% | 0.2% |

| tmp_payment_types | Voucher | 1621 | 10 | 0.6% | 1.6% | 1.6% | 0.0% |

Key Observations:

The “boleto” payment type has a slightly higher proportion difference.

Let’s look at it broken down by the average order rating.

df_orders['order_status'].explore.anomalies_by_categories(

custom_mask=df_orders.order_status == 'Unavailable'

, pct_diff_threshold=0

, include_columns='tmp_avg_reviews_score'

)

| Column | Category | Total | Anomaly | Anomaly Rate | Total % | Anomaly % | % Diff |

|---|---|---|---|---|---|---|---|

| tmp_avg_reviews_score | 1 | 11756 | 472 | 4.0% | 11.8% | 77.5% | 65.7% |

| tmp_avg_reviews_score | 2 | 3244 | 46 | 1.4% | 3.3% | 7.6% | 4.3% |

Key Observations:

The difference in proportions is much higher for a rating of 1.

78% of orders with “unavailable” status have a rating of 1.

8% of orders have a rating of 2.

Customers are clearly dissatisfied.

Let’s randomly sample 20 review comments.

We’ll repeat this several times.

tmp_anomal = tmp_anomal.merge(df_reviews, on='order_id', how='left')

messages = (

tmp_anomal['review_comment_message']

.dropna()

.sample(20)

.tolist()

)

display(messages)

['i m waiting until today the product has already completed 30 days of purchase',

'almost a month ago the watch was ordered and paid via boleem if you want to and so far nothing i sent two emails and they didn t even give me satisfaction; oslit stores for me is out lannister without prestige',

'allowed to complete the purchase but did not have the product in stock greater fatigue to get the money back',

'total dissatisfaction with this store sells a product is not delivery tremendous lack of respect',

'i was outraged why it sold',

'i still haven t received the product the deadlines have passed and so far no response now bear the headache to resolve',

'product did not arrive within the time listed',

'in 2 months of waiting i did not receive the product i divided it into two times paid both times and did not receive the product a shame i m going to the procon because baratheon doesn t answer the phone shame',

'i m super dissatisfied i needed the material urgently and i only bought it because lannister stores never had any problems since the supplier doesn t say the same there was no commitment',

'you sold something you didn t have in stock this procedure is problematic my daughter is still waiting for the doll i bought christmas passed and nothing comes the new year tomorrow it s nothing i want the product',

'i didn t receive my product i was already charged in cartaxo',

'shame on such a partner he sold the product confirmed it deducted the amount from the card and now wants to cancel the order because he claims not to have it in stock that s a crime and the stark follows in silence',

'i would not recommend it because i made my purchase made the payment and after a few days i received an email that the partners would not deliver in my region',

'i didn t receive the product',

'i bought a skate through the store and the delivery was scheduled for 05/01/18 until the present date i have not received it',

'didn t give me any satisfaction',

'the scheduled days passed',

'lack of respect for the consumer after everything is finalized they make excuses not to deliver never again the lannister website cannot have a link with this type of seller',

'i am filing a complaint with the procon i can t talk to anyone in this loha',

'deadline for delivery 22/12 two days before the store warned that it does not have the product and does not even know when it will be available so i had to go looking for the skate to deliver for christmas to my grandson i want a refund']

Key Observations:

Review messages indicate orders were not delivered.

Some reviews mention items being out of stock.

Let’s examine a word cloud from review messages.

tmp_anomal.viz.wordcloud('review_comment_message')

Key Observations:

Many words relate to delivery.

Let’s analyze the sentiment of the text.

tmp_anomal.analysis.sentiment('review_comment_message')

Key Observations:

Negative reviews outnumber positive ones, and the boxplot mostly lies below 0.

canceled

Let’s look at orders with the status ‘canceled’ by month.

df_orders['order_status'].explore.anomalies_over_time(

time_column='order_purchase_dt'

, custom_mask=df_orders.order_status == 'Canceled'

, freq='ME'

)

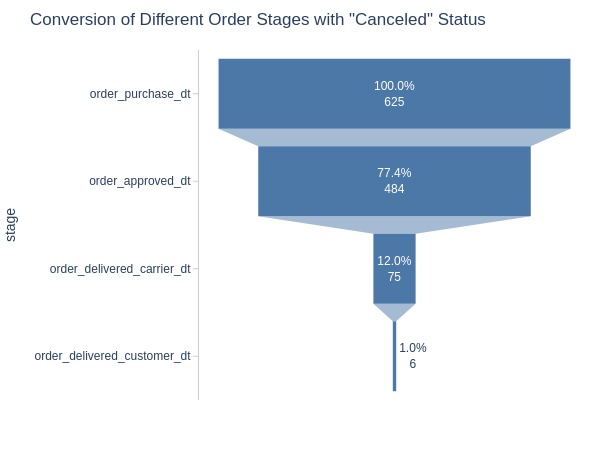

Order cancellation can occur at different stages, so there may be missing values at various points.

Let’s look at the missing values.

tmp_anomal = df_orders[lambda x: x.order_status == 'Canceled']

tmp_anomal.explore.detect_anomalies()

| Count | Percent | |

|---|---|---|

| order_approved_dt | 141 | 22.56% |

| order_delivered_carrier_dt | 550 | 88.00% |

| order_delivered_customer_dt | 619 | 99.04% |

Conversion at different stages

Let’s look at the count of different order status timestamps.

Let’s check if there are any missing values between the dates.

mask = tmp_anomal['order_delivered_carrier_dt'].isna() & tmp_anomal['order_delivered_customer_dt'].notna()

tmp_anomal.loc[mask, 'order_delivered_carrier_dt']

SeriesOn([], Name: order_delivered_carrier_dt, dtype: datetime64[ns])

mask = tmp_anomal['order_approved_dt'].isna() & tmp_anomal['order_delivered_carrier_dt'].notna()

tmp_anomal.loc[mask, 'order_approved_dt']

SeriesOn([], Name: order_approved_dt, dtype: datetime64[ns])

All good.

tmp_funnel = (

tmp_anomal[['order_purchase_dt', 'order_approved_dt', 'order_delivered_carrier_dt', 'order_delivered_customer_dt']]

.count()

.to_frame('count')

.assign(share = lambda x: (x['count']*100 / x['count']['order_purchase_dt']).round(1).astype(str) + '%')

.reset_index(names='stage')

)

px.funnel(

tmp_funnel,

x='count',

y='stage',

text='share',

width=600,

title='Conversion of Different Order Stages with "Canceled" Status'

)

Key Observations:

The process stops at different stages, most often between payment approval and carrier handover.

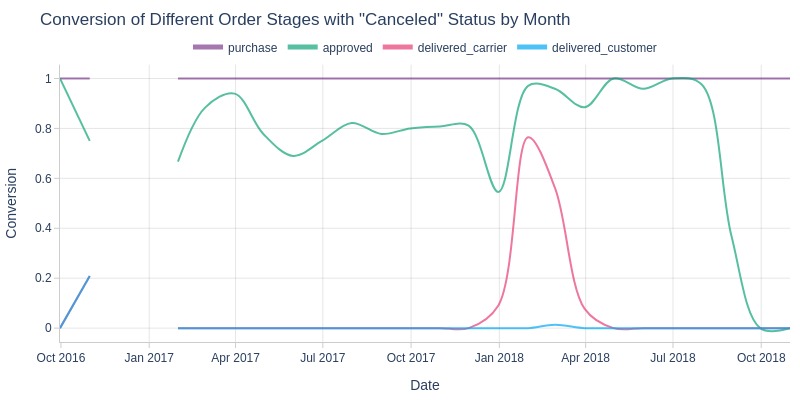

Let’s look at the conversion at each stage by month.

For this, we will count the number of canceled orders with specific timestamps in each period and divide by the number of canceled orders at the time of purchase.

tmp_res_df = (

tmp_anomal.resample('ME', on='order_purchase_dt')

.agg(

purchase = ('order_id', 'count')

, approved = ('order_approved_dt', 'count')

, delivered_carrier = ('order_delivered_carrier_dt', 'count')

, delivered_customer = ('order_delivered_customer_dt', 'count')

)

)

tmp_res_df = tmp_res_df.div(tmp_res_df['purchase'], axis=0)

tmp_res_df = (

tmp_res_df.reset_index(names='date')

.melt(id_vars='date', var_name='date_type', value_name='count')

)

Let’s look at the non-normalized values. That is, divide each value (count with a specific timestamp) by the total value for the period.

labels = dict(

date = 'Date',

date_type = 'Date Type',

count = 'Conversion'

)

tmp_res_df.viz.line(

x='date'

, y='count'

, color='date_type'

, labels=labels

, title='Conversion of Different Order Stages with "Canceled" Status by Month'

)

Key Observations:

Canceled orders almost never have delivery timestamps, which is logical.

From December 2017 to March 2018, there was a significant spike in canceled orders that had carrier handover timestamps but no delivery timestamps, indicating delivery issues during this period.

About 80% of canceled orders have payment approval timestamps, but this proportion increased significantly starting January 2018, approaching 100%.

Number of Last Stages

Let’s look at the last stage to which orders with the status ‘canceled’ reach over time.

For this:

transform the wide table into a long one, making the name of the time variable a category;

remove missing values in the time (this will be the variable with the value after melt);

convert these categories into a categorical type in pandas and specify the order;

group by order;

take the first time in each group (all entries in the group will have the same time);

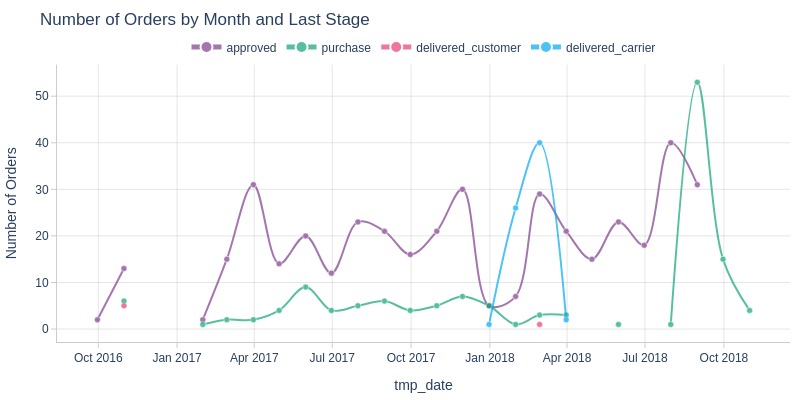

take the maximum stage (since we specified the order, this will be the last stage of the order).